KoreanFoodie's Study

Solutions to Linear Algebra, Stephen H. Friedberg, Fourth Edition (Chapter 5)

GoldGiver 2019. 6. 15. 13:18

Solution manual to Linear Algebra, Fourth Edition, Stephen H. Friedberg. (Chapter 5)

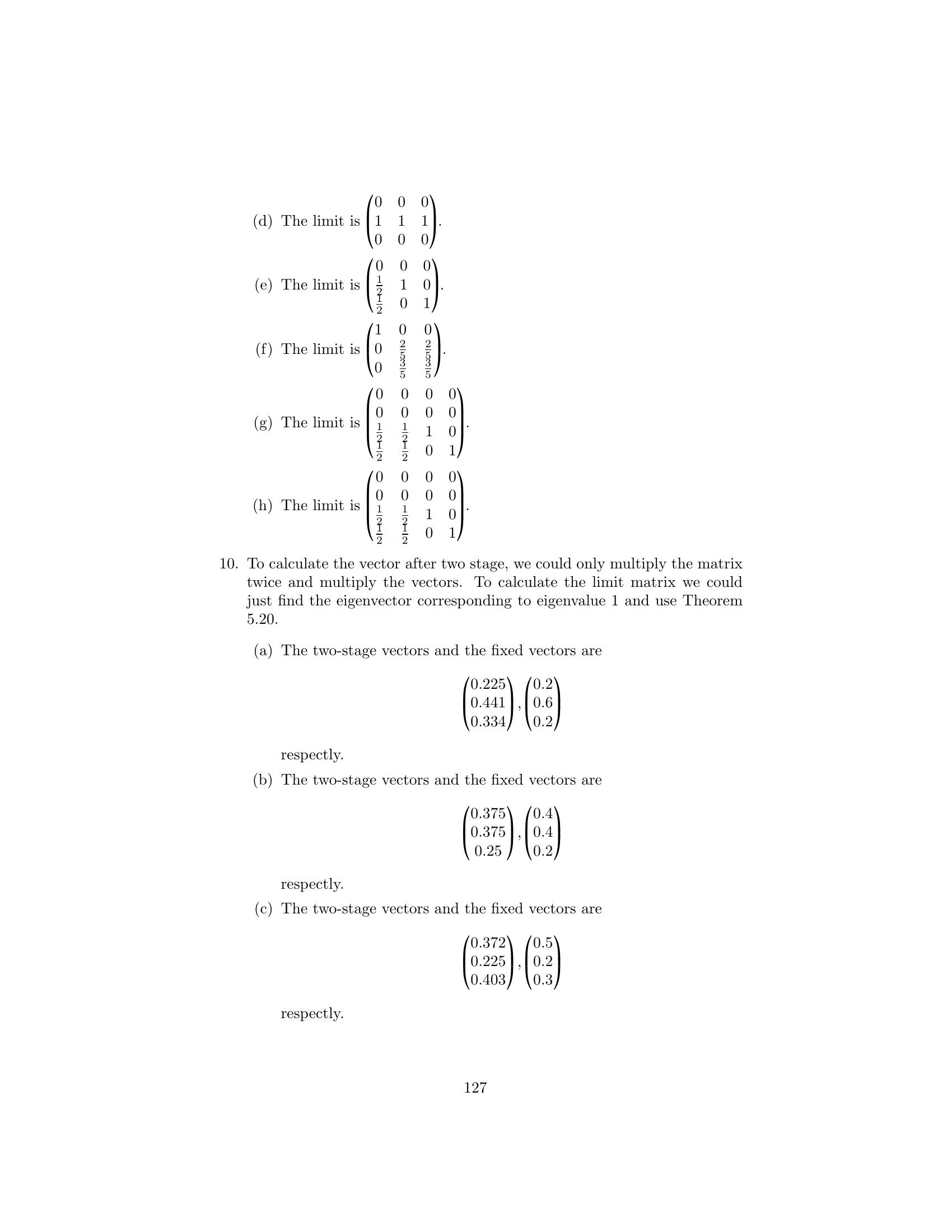

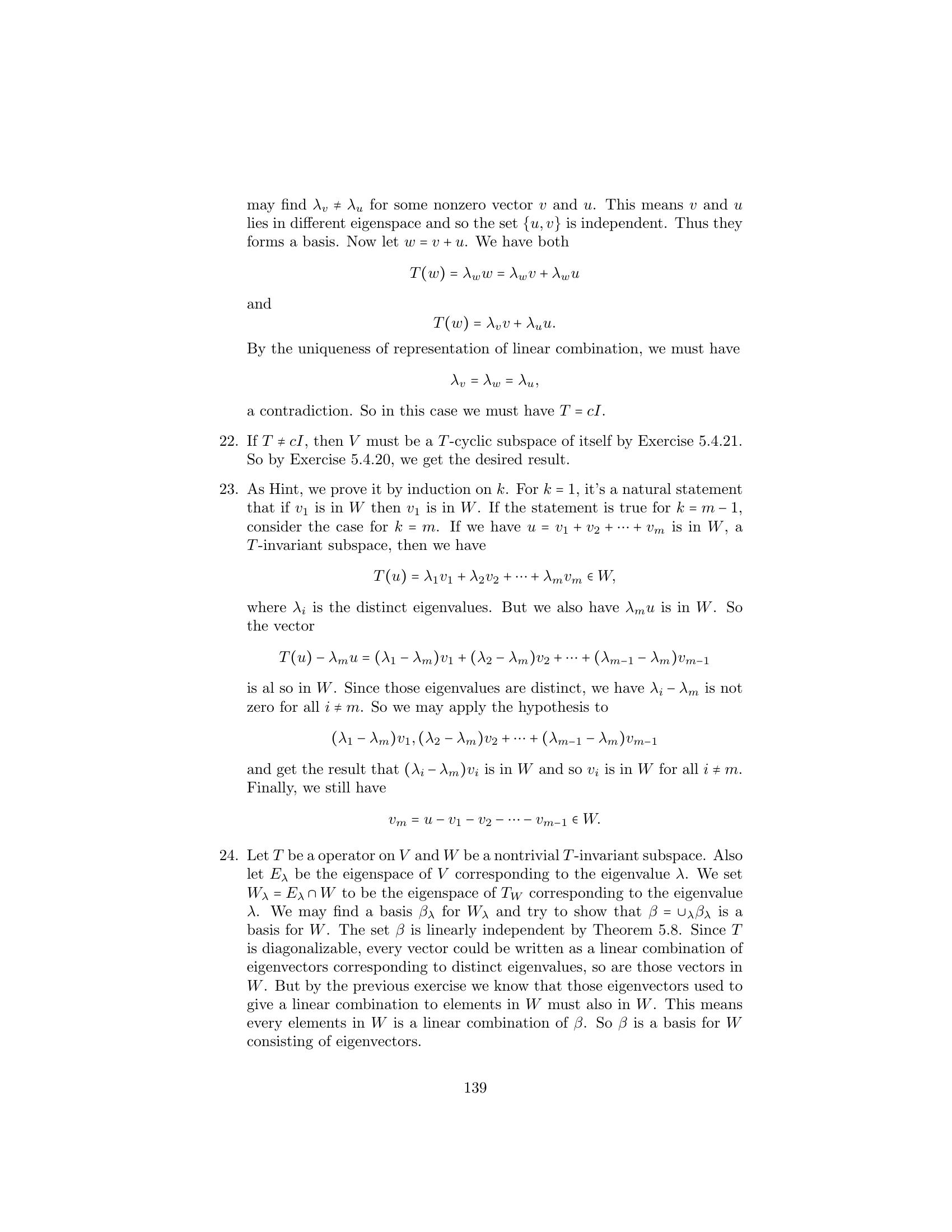

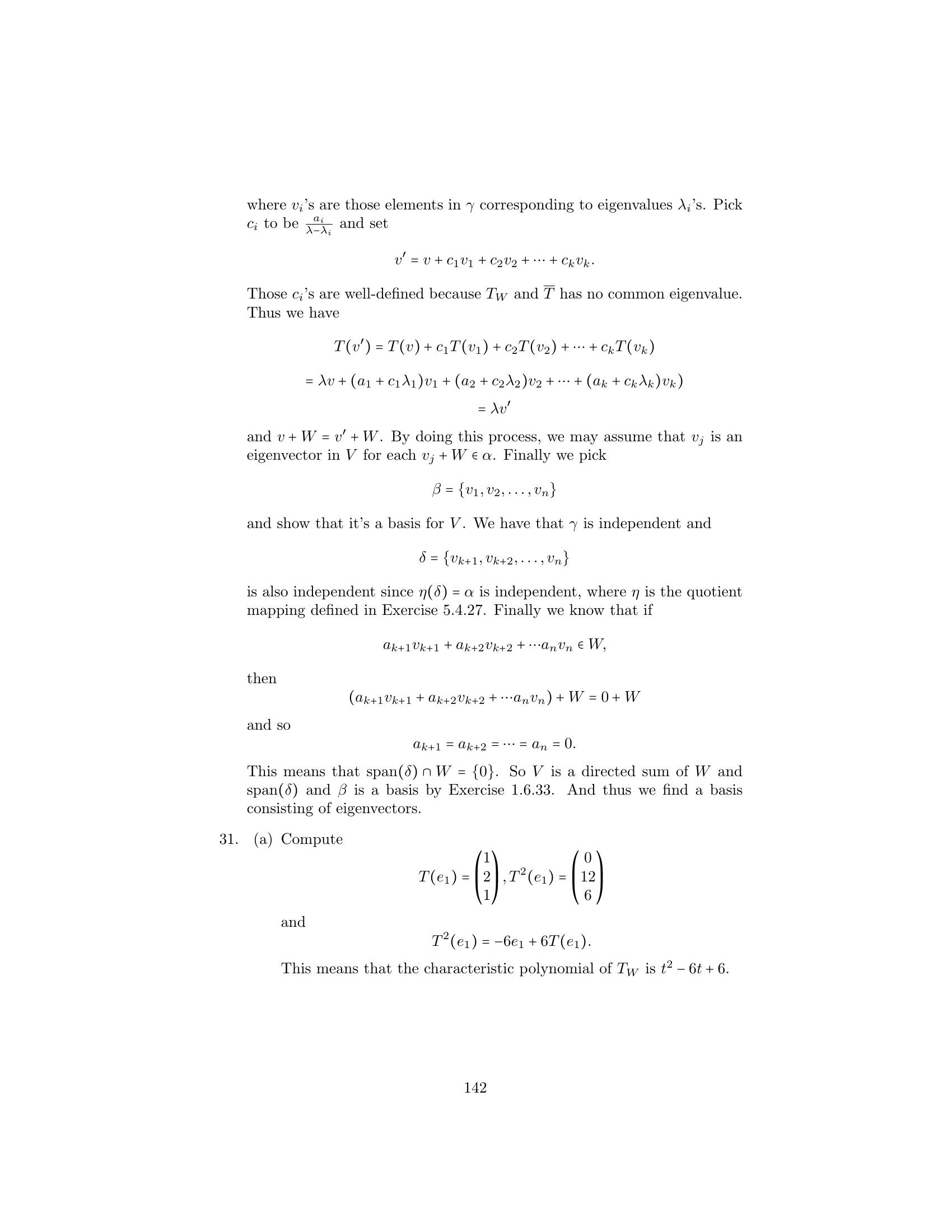

Sec. 5.1 Eigenvalues and Eigenvectors

1. Label the following statements as true or false.
   (a) Every linear operator on an $n$-dimensional vector space has $n$ distinct eigenvalues.
   (b) If a real matrix has one eigenvector, then it has an infinite number of eigenvectors.
   (c) There exists a square matrix with no eigenvectors.
   (d) Eigenvalues must be nonzero scalars.
   (e) Any two eigenvectors are linearly independent.
   (f) The sum of two eigenvalues of a linear operator $T$ is also an eigenvalue of $T$.
   (g) Linear operators on infinite-dimensional vector spaces never have eigenvalues.
   (h) An $n \times n$ matrix $A$ with entries from a field $F$ is similar to a diagonal matrix if and only if there is a basis for $F^n$ consisting of eigenvectors of $A$.
   (i) Similar matrices always have the same eigenvalues.
   (j) Similar matrices always have the same eigenvectors.
   (k) The sum of two eigenvectors of an operator $T$ is always an eigenvector of $T$.

2. For each of the following linear operators $T$ on a vector space $V$ and ordered bases $\beta$, compute $[T]_\beta$, and determine whether $\beta$ is a basis consisting of eigenvectors of $T$.
   (a) $V = R^2$, $T\begin{pmatrix} a \\ b \end{pmatrix} = \begin{pmatrix} 10a - 6b \\ 17a - 10b \end{pmatrix}$, and $\beta = \left\{ \begin{pmatrix} 1 \\ 2 \end{pmatrix}, \begin{pmatrix} 2 \\ 3 \end{pmatrix} \right\}$
   (b) $V = P_1(R)$, $T(a + bx) = (6a - 6b) + (12a - 11b)x$, and $\beta = \{3 + 4x,\ 2 + 3x\}$
   (c) $V = R^3$, $T\begin{pmatrix} a \\ b \\ c \end{pmatrix} = \begin{pmatrix} 3a + 2b - 2c \\ -4a - 3b + 2c \\ -c \end{pmatrix}$, and $\beta = \left\{ \begin{pmatrix} 0 \\ 1 \\ 1 \end{pmatrix}, \begin{pmatrix} 1 \\ -1 \\ 0 \end{pmatrix}, \begin{pmatrix} 1 \\ 0 \\ 2 \end{pmatrix} \right\}$
   (d) $V = P_2(R)$, $T(a + bx + cx^2) = (-4a + 2b - 2c) - (7a + 3b + 7c)x + (7a + b + 5c)x^2$, and $\beta = \{x - x^2,\ -1 + x^2,\ -1 - x + x^2\}$
   (e) $V = P_3(R)$, $T(a + bx + cx^2 + dx^3) = -d + (-c + d)x + (a + b - 2c)x^2 + (-b + c - 2d)x^3$, and $\beta = \{1 - x + x^3,\ 1 + x^2,\ 1,\ x + x^2\}$
   (f) $V = M_{2 \times 2}(R)$, $T\begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{pmatrix} -7a - 4b + 4c - 4d & b \\ -8a - 4b + 5c - 4d & d \end{pmatrix}$, and $\beta = \left\{ \begin{pmatrix} 1 & 0 \\ 1 & 0 \end{pmatrix}, \begin{pmatrix} -1 & 2 \\ 0 & 0 \end{pmatrix}, \begin{pmatrix} 1 & 0 \\ 2 & 0 \end{pmatrix}, \begin{pmatrix} -1 & 0 \\ 0 & 2 \end{pmatrix} \right\}$

3. For each of the following matrices $A \in M_{n \times n}(F)$,
   (i) Determine all the eigenvalues of $A$.
   (ii) For each eigenvalue $\lambda$ of $A$, find the set of eigenvectors corresponding to $\lambda$.
   (iii) If possible, find a basis for $F^n$ consisting of eigenvectors of $A$.
   (iv) If successful in finding such a basis, determine an invertible matrix $Q$ and a diagonal matrix $D$ such that $Q^{-1}AQ = D$.

   (a) $A = \begin{pmatrix} 1 & 2 \\ 3 & 2 \end{pmatrix}$ for $F = R$
   (b) $A = \begin{pmatrix} 0 & -2 & -3 \\ -1 & 1 & -1 \\ 2 & 2 & 5 \end{pmatrix}$ for $F = R$
   (c) $A = \begin{pmatrix} i & 1 \\ 2 & -i \end{pmatrix}$ for $F = C$
   (d) $A = \begin{pmatrix} 2 & 0 & -1 \\ 4 & 1 & -4 \\ 2 & 0 & -1 \end{pmatrix}$ for $F = R$

4. For each linear operator $T$ on $V$, find the eigenvalues of $T$ and an ordered basis $\beta$ for $V$ such that $[T]_\beta$ is a diagonal matrix.
   (a) $V = R^2$ and $T(a, b) = (-2a + 3b,\ -10a + 9b)$
   (b) $V = R^3$ and $T(a, b, c) = (7a - 4b + 10c,\ 4a - 3b + 8c,\ -2a + b - 2c)$
   (c) $V = R^3$ and $T(a, b, c) = (-4a + 3b - 6c,\ 6a - 7b + 12c,\ 6a - 6b + 11c)$
   (d) $V = P_1(R)$ and $T(ax + b) = (-6a + 2b)x + (-6a + b)$
   (e) $V = P_2(R)$ and $T(f(x)) = xf'(x) + f(2)x + f(3)$
   (f) $V = P_3(R)$ and $T(f(x)) = f(x) + f(2)x$
   (g) $V = P_3(R)$ and $T(f(x)) = xf'(x) + f''(x) - f(2)$
   (h) $V = M_{2 \times 2}(R)$ and $T\begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{pmatrix} d & b \\ c & a \end{pmatrix}$
   (i) $V = M_{2 \times 2}(R)$ and $T\begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{pmatrix} c & d \\ a & b \end{pmatrix}$
   (j) $V = M_{2 \times 2}(R)$ and $T(A) = A^t + 2 \cdot \mathrm{tr}(A) \cdot I_2$

5. Prove Theorem 5.4.

6. Let $T$ be a linear operator on a finite-dimensional vector space $V$, and let $\beta$ be an ordered basis for $V$. Prove that $\lambda$ is an eigenvalue of $T$ if and only if $\lambda$ is an eigenvalue of $[T]_\beta$.

7. Let $T$ be a linear operator on a finite-dimensional vector space $V$. We define the determinant of $T$, denoted $\det(T)$, as follows: Choose any ordered basis $\beta$ for $V$, and define $\det(T) = \det([T]_\beta)$.
   (a) Prove that the preceding definition is independent of the choice of an ordered basis for $V$. That is, prove that if $\beta$ and $\gamma$ are two ordered bases for $V$, then $\det([T]_\beta) = \det([T]_\gamma)$.
   (b) Prove that $T$ is invertible if and only if $\det(T) \neq 0$.
   (c) Prove that if $T$ is invertible, then $\det(T^{-1}) = [\det(T)]^{-1}$.
   (d) Prove that if $U$ is also a linear operator on $V$, then $\det(TU) = \det(T) \cdot \det(U)$.
   (e) Prove that $\det(T - \lambda I_V) = \det([T]_\beta - \lambda I)$ for any scalar $\lambda$ and any ordered basis $\beta$ for $V$.
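
The claim in Exercise 7(a) can be sanity-checked numerically before proving it. The sketch below is an illustration only, not part of the textbook or of the posted solutions: it takes the operator and basis from Exercise 2(a), forms $[T]_\beta = Q^{-1}AQ$ with exact rational arithmetic, and compares the two determinants. The helper names `matmul2`, `det2`, and `inv2` are hypothetical and written just for this 2 × 2 case.

```python
from fractions import Fraction

def matmul2(X, Y):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def det2(M):
    """Determinant of a 2x2 matrix."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def inv2(M):
    """Inverse of a 2x2 matrix via the adjugate, using exact fractions."""
    d = Fraction(det2(M))
    return [[M[1][1] / d, -M[0][1] / d],
            [-M[1][0] / d, M[0][0] / d]]

# Standard matrix of T(a, b) = (10a - 6b, 17a - 10b) from Exercise 2(a).
A = [[10, -6], [17, -10]]
# Columns of Q are the vectors of beta = {(1, 2), (2, 3)}.
Q = [[1, 2], [2, 3]]

# Matrix of T relative to beta: [T]_beta = Q^{-1} A Q.
B = matmul2(inv2(Q), matmul2(A, Q))

# B works out to [[0, 2], [-1, 0]]: not diagonal, so beta is not a basis
# of eigenvectors, yet det(B) equals det(A), as Exercise 7(a) predicts.
same_det = det2(A) == det2(B)
```

A single example proves nothing, of course; the general argument rests on $\det(Q^{-1}AQ) = \det(Q)^{-1}\det(A)\det(Q)$.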
8. (a) Prove that a linear operator $T$ on a finite-dimensional vector space is invertible if and only if zero is not an eigenvalue of $T$.
   (b) Let $T$ be an invertible linear operator. Prove that a scalar $\lambda$ is an eigenvalue of $T$ if and only if $\lambda^{-1}$ is an eigenvalue of $T^{-1}$.
   (c) State and prove results analogous to (a) and (b) for matrices.

9. Prove that the eigenvalues of an upper triangular matrix $M$ are the diagonal entries of $M$.

10. Let $V$ be a finite-dimensional vector space, and let $\lambda$ be any scalar.
   (a) For any ordered basis $\beta$ for $V$, prove that $[\lambda I_V]_\beta = \lambda I$.
   (b) Compute the characteristic polynomial of $\lambda I_V$.
   (c) Show that $\lambda I_V$ is diagonalizable and has only one eigenvalue.

11. A scalar matrix is a square matrix of the form $\lambda I$ for some scalar $\lambda$; that is, a scalar matrix is a diagonal matrix in which all the diagonal entries are equal.
   (a) Prove that if a square matrix $A$ is similar to a scalar matrix $\lambda I$, then $A = \lambda I$.
   (b) Show that a diagonalizable matrix having only one eigenvalue is a scalar matrix.
   (c) Prove that $\begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}$ is not diagonalizable.

12. (a) Prove that similar matrices have the same characteristic polynomial.
   (b) Show that the definition of the characteristic polynomial of a linear operator on a finite-dimensional vector space $V$ is independent of the choice of basis for $V$.

13. Let $T$ be a linear operator on a finite-dimensional vector space $V$ over a field $F$, let $\beta$ be an ordered basis for $V$, and let $A = [T]_\beta$. In reference to Figure 5.1, prove the following.
   (a) If $v \in V$ and $\phi_\beta(v)$ is an eigenvector of $A$ corresponding to the eigenvalue $\lambda$, then $v$ is an eigenvector of $T$ corresponding to $\lambda$.
   (b) If $\lambda$ is an eigenvalue of $A$ (and hence of $T$), then a vector $y \in F^n$ is an eigenvector of $A$ corresponding to $\lambda$ if and only if $\phi_\beta^{-1}(y)$ is an eigenvector of $T$ corresponding to $\lambda$.

14. † For any square matrix $A$, prove that $A$ and $A^t$ have the same characteristic polynomial (and hence the same eigenvalues).

15. † (a) Let $T$ be a linear operator on a vector space $V$, and let $x$ be an eigenvector of $T$ corresponding to the eigenvalue $\lambda$. For any positive integer $m$, prove that $x$ is an eigenvector of $T^m$ corresponding to the eigenvalue $\lambda^m$.
   (b) State and prove the analogous result for matrices.

16. (a) Prove that similar matrices have the same trace. Hint: Use Exercise 13 of Section 2.3.
   (b) How would you define the trace of a linear operator on a finite-dimensional vector space? Justify that your definition is well-defined.

17. Let $T$ be the linear operator on $M_{n \times n}(R)$ defined by $T(A) = A^t$.
   (a) Show that $\pm 1$ are the only eigenvalues of $T$.
   (b) Describe the eigenvectors corresponding to each eigenvalue of $T$.
   (c) Find an ordered basis $\beta$ for $M_{2 \times 2}(R)$ such that $[T]_\beta$ is a diagonal matrix.
   (d) Find an ordered basis $\beta$ for $M_{n \times n}(R)$ such that $[T]_\beta$ is a diagonal matrix for $n > 2$.

18. Let $A, B \in M_{n \times n}(C)$.
   (a) Prove that if $B$ is invertible, then there exists a scalar $c \in C$ such that $A + cB$ is not invertible. Hint: Examine $\det(A + cB)$.
   (b) Find nonzero $2 \times 2$ matrices $A$ and $B$ such that both $A$ and $A + cB$ are invertible for all $c \in C$.

19. † Let $A$ and $B$ be similar $n \times n$ matrices. Prove that there exists an $n$-dimensional vector space $V$, a linear operator $T$ on $V$, and ordered bases $\beta$ and $\gamma$ for $V$ such that $A = [T]_\beta$ and $B = [T]_\gamma$. Hint: Use Exercise 14 of Section 2.5.

20. Let $A$ be an $n \times n$ matrix with characteristic polynomial $f(t) = (-1)^n t^n + a_{n-1} t^{n-1} + \cdots + a_1 t + a_0$. Prove that $f(0) = a_0 = \det(A)$. Deduce that $A$ is invertible if and only if $a_0 \neq 0$.

21. Let $A$ and $f(t)$ be as in Exercise 20.
   (a) Prove that $f(t) = (A_{11} - t)(A_{22} - t) \cdots (A_{nn} - t) + q(t)$, where $q(t)$ is a polynomial of degree at most $n - 2$. Hint: Apply mathematical induction to $n$.
   (b) Show that $\mathrm{tr}(A) = (-1)^{n-1} a_{n-1}$.

22. † (a) Let $T$ be a linear operator on a vector space $V$ over the field $F$, and let $g(t)$ be a polynomial with coefficients from $F$.
   Prove that if $x$ is an eigenvector of $T$ with corresponding eigenvalue $\lambda$, then $g(T)(x) = g(\lambda)x$. That is, $x$ is an eigenvector of $g(T)$ with corresponding eigenvalue $g(\lambda)$.
   (b) State and prove a comparable result for matrices.
   (c) Verify (b) for the matrix $A$ in Exercise 3(a) with polynomial $g(t) = 2t^2 - t + 1$, eigenvector $x = \begin{pmatrix} 2 \\ 3 \end{pmatrix}$, and corresponding eigenvalue $\lambda = 4$.

23. Use Exercise 22 to prove that if $f(t)$ is the characteristic polynomial of a diagonalizable linear operator $T$, then $f(T) = T_0$, the zero operator. (In Section 5.4 we prove that this result does not depend on the diagonalizability of $T$.)

24. Use Exercise 21(a) to prove Theorem 5.3.

25. Prove Corollaries 1 and 2 of Theorem 5.3.

26. Determine the number of distinct characteristic polynomials of matrices in $M_{2 \times 2}(Z_2)$.

Sec. 5.2 Diagonalizability

1. Label the following statements as true or false.
   (a) Any linear operator on an $n$-dimensional vector space that has fewer than $n$ distinct eigenvalues is not diagonalizable.
   (b) Two distinct eigenvectors corresponding to the same eigenvalue are always linearly dependent.
   (c) If $\lambda$ is an eigenvalue of a linear operator $T$, then each vector in $E_\lambda$ is an eigenvector of $T$.
   (d) If $\lambda_1$ and $\lambda_2$ are distinct eigenvalues of a linear operator $T$, then $E_{\lambda_1} \cap E_{\lambda_2} = \{0\}$.
   (e) Let $A \in M_{n \times n}(F)$ and $\beta = \{v_1, v_2, \ldots, v_n\}$ be an ordered basis for $F^n$ consisting of eigenvectors of $A$. If $Q$ is the $n \times n$ matrix whose $j$th column is $v_j$ ($1 \le j \le n$), then $Q^{-1}AQ$ is a diagonal matrix.
   (f) A linear operator $T$ on a finite-dimensional vector space is diagonalizable if and only if the multiplicity of each eigenvalue $\lambda$ equals the dimension of $E_\lambda$.
   (g) Every diagonalizable linear operator on a nonzero vector space has at least one eigenvalue.

   The following two items relate to the optional subsection on direct sums.
   (h) If a vector space is the direct sum of subspaces $W_1, W_2, \ldots, W_k$, then $W_i \cap W_j = \{0\}$ for $i \neq j$.
   (i) If $V = \sum_{i=1}^{k} W_i$ and $W_i \cap W_j = \{0\}$ for $i \neq j$, then $V = W_1 \oplus W_2 \oplus \cdots \oplus W_k$.

2. For each of the following matrices $A \in M_{n \times n}(R)$, test $A$ for diagonalizability, and if $A$ is diagonalizable, find an invertible matrix $Q$ and a diagonal matrix $D$ such that $Q^{-1}AQ = D$.
   (a) $\begin{pmatrix} 1 & 2 \\ 0 & 1 \end{pmatrix}$
   (b) $\begin{pmatrix} 1 & 3 \\ 3 & 1 \end{pmatrix}$
   (c) $\begin{pmatrix} 1 & 4 \\ 3 & 2 \end{pmatrix}$
   (d) $\begin{pmatrix} 7 & -4 & 0 \\ 8 & -5 & 0 \\ 6 & -6 & 3 \end{pmatrix}$
   (e) $\begin{pmatrix} 0 & 0 & 1 \\ 1 & 0 & -1 \\ 0 & 1 & 1 \end{pmatrix}$
   (f) $\begin{pmatrix} 1 & 1 & 0 \\ 0 & 1 & 2 \\ 0 & 0 & 3 \end{pmatrix}$
   (g) $\begin{pmatrix} 3 & 1 & 1 \\ 2 & 4 & 2 \\ -1 & -1 & 1 \end{pmatrix}$

3. For each of the following linear operators $T$ on a vector space $V$, test $T$ for diagonalizability, and if $T$ is diagonalizable, find a basis $\beta$ for $V$ such that $[T]_\beta$ is a diagonal matrix.
   (a) $V = P_3(R)$ and $T$ is defined by $T(f(x)) = f'(x) + f''(x)$, respectively.
   (b) $V = P_2(R)$ and $T$ is defined by $T(ax^2 + bx + c) = cx^2 + bx + a$.
   (c) $V = R^3$ and $T$ is defined by $T\begin{pmatrix} a_1 \\ a_2 \\ a_3 \end{pmatrix} = \begin{pmatrix} a_2 \\ -a_1 \\ 2a_3 \end{pmatrix}$.
   (d) $V = P_2(R)$ and $T$ is defined by $T(f(x)) = f(0) + f(1)(x + x^2)$.
   (e) $V = C^2$ and $T$ is defined by $T(z, w) = (z + iw,\ iz + w)$.
   (f) $V = M_{2 \times 2}(R)$ and $T$ is defined by $T(A) = A^t$.

4. Prove the matrix version of the corollary to Theorem 5.5: If $A \in M_{n \times n}(F)$ has $n$ distinct eigenvalues, then $A$ is diagonalizable.

5. State and prove the matrix version of Theorem 5.6.

6. (a) Justify the test for diagonalizability and the method for diagonalization stated in this section.
   (b) Formulate the results in (a) for matrices.

7. For $A = \begin{pmatrix} 1 & 4 \\ 2 & 3 \end{pmatrix} \in M_{2 \times 2}(R)$, find an expression for $A^n$, where $n$ is an arbitrary positive integer.

8. Suppose that $A \in M_{n \times n}(F)$ has two distinct eigenvalues, $\lambda_1$ and $\lambda_2$, and that $\dim(E_{\lambda_1}) = n - 1$. Prove that $A$ is diagonalizable.

9. Let $T$ be a linear operator on a finite-dimensional vector space $V$, and suppose there exists an ordered basis $\beta$ for $V$ such that $[T]_\beta$ is an upper triangular matrix.
   (a) Prove that the characteristic polynomial for $T$ splits.
   (b) State and prove an analogous result for matrices.

   The converse of (a) is treated in Exercise 32 of Section 5.4.

10. Let $T$ be a linear operator on a finite-dimensional vector space $V$ with the distinct eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_k$ and corresponding multiplicities $m_1, m_2, \ldots, m_k$. Suppose that $\beta$ is a basis for $V$ such that $[T]_\beta$ is an upper triangular matrix. Prove that the diagonal entries of $[T]_\beta$ are $\lambda_1, \lambda_2, \ldots, \lambda_k$ and that each $\lambda_i$ occurs $m_i$ times ($1 \le i \le k$).

11. Let $A$ be an $n \times n$ matrix that is similar to an upper triangular matrix and has the distinct eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_k$ with corresponding multiplicities $m_1, m_2, \ldots, m_k$. Prove the following statements.
   (a) $\mathrm{tr}(A) = \sum_{i=1}^{k} m_i \lambda_i$
   (b) $\det(A) = (\lambda_1)^{m_1} (\lambda_2)^{m_2} \cdots (\lambda_k)^{m_k}$

12. Let $T$ be an invertible linear operator on a finite-dimensional vector space $V$.
   (a) Recall that for any eigenvalue $\lambda$ of $T$, $\lambda^{-1}$ is an eigenvalue of $T^{-1}$ (Exercise 8 of Section 5.1). Prove that the eigenspace of $T$ corresponding to $\lambda$ is the same as the eigenspace of $T^{-1}$ corresponding to $\lambda^{-1}$.
   (b) Prove that if $T$ is diagonalizable, then $T^{-1}$ is diagonalizable.

13. Let $A \in M_{n \times n}(F)$. Recall from Exercise 14 of Section 5.1 that $A$ and $A^t$ have the same characteristic polynomial and hence share the same eigenvalues with the same multiplicities. For any eigenvalue $\lambda$ of $A$ and $A^t$, let $E_\lambda$ and $E'_\lambda$ denote the corresponding eigenspaces for $A$ and $A^t$, respectively.
   (a) Show by way of example that for a given common eigenvalue, these two eigenspaces need not be the same.
   (b) Prove that for any eigenvalue $\lambda$, $\dim(E_\lambda) = \dim(E'_\lambda)$.
   (c) Prove that if $A$ is diagonalizable, then $A^t$ is also diagonalizable.

14. Find the general solution to each system of differential equations.
   (a) $x' = x + y$, $\ y' = 3x - y$
   (b) $x_1' = 8x_1 + 10x_2$, $\ x_2' = -5x_1 - 7x_2$
   (c) $x_1' = x_1 + x_3$, $\ x_2' = x_2 + x_3$, $\ x_3' = 2x_3$

15. Let
   $$A = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix}$$
   be the coefficient matrix of the system of differential equations
   $$\begin{aligned} x_1' &= a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n \\ x_2' &= a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n \\ &\ \,\vdots \\ x_n' &= a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n. \end{aligned}$$
   Suppose that $A$ is diagonalizable and that the distinct eigenvalues of $A$ are $\lambda_1, \lambda_2, \ldots, \lambda_k$. Prove that a differentiable function $x\colon R \to R^n$ is a solution to the system if and only if $x$ is of the form
   $$x(t) = e^{\lambda_1 t} z_1 + e^{\lambda_2 t} z_2 + \cdots + e^{\lambda_k t} z_k,$$
   where $z_i \in E_{\lambda_i}$ for $i = 1, 2, \ldots, k$. Use this result to prove that the set of solutions to the system is an $n$-dimensional real vector space.

16. Let $C \in M_{m \times n}(R)$, and let $Y$ be an $n \times p$ matrix of differentiable functions. Prove $(CY)' = CY'$, where $(Y')_{ij} = Y'_{ij}$ for all $i, j$.

Exercises 17 through 19 are concerned with simultaneous diagonalization.

Definitions. Two linear operators $T$ and $U$ on a finite-dimensional vector space $V$ are called simultaneously diagonalizable if there exists an ordered basis $\beta$ for $V$ such that both $[T]_\beta$ and $[U]_\beta$ are diagonal matrices. Similarly, $A, B \in M_{n \times n}(F)$ are called simultaneously diagonalizable if there exists an invertible matrix $Q \in M_{n \times n}(F)$ such that both $Q^{-1}AQ$ and $Q^{-1}BQ$ are diagonal matrices.

17. (a) Prove that if $T$ and $U$ are simultaneously diagonalizable linear operators on a finite-dimensional vector space $V$, then the matrices $[T]_\beta$ and $[U]_\beta$ are simultaneously diagonalizable for any ordered basis $\beta$.
   (b) Prove that if $A$ and $B$ are simultaneously diagonalizable matrices, then $L_A$ and $L_B$ are simultaneously diagonalizable linear operators.

18. (a) Prove that if $T$ and $U$ are simultaneously diagonalizable operators, then $T$ and $U$ commute (i.e., $TU = UT$).
   (b) Show that if $A$ and $B$ are simultaneously diagonalizable matrices, then $A$ and $B$ commute.

   The converses of (a) and (b) are established in Exercise 25 of Section 5.4.

19. Let $T$ be a diagonalizable linear operator on a finite-dimensional vector space, and let $m$ be any positive integer. Prove that $T$ and $T^m$ are simultaneously diagonalizable.

Exercises 20 through 23 are concerned with direct sums.

20. Let $W_1, W_2, \ldots, W_k$ be subspaces of a finite-dimensional vector space $V$ such that $\sum_{i=1}^{k} W_i = V$. Prove that $V$ is the direct sum of $W_1, W_2, \ldots, W_k$ if and only if $\dim(V) = \sum_{i=1}^{k} \dim(W_i)$.

21. Let $V$ be a finite-dimensional vector space with a basis $\beta$, and let $\beta_1, \beta_2, \ldots, \beta_k$ be a partition of $\beta$ (i.e., $\beta_1, \beta_2, \ldots, \beta_k$ are subsets of $\beta$ such that $\beta = \beta_1 \cup \beta_2 \cup \cdots \cup \beta_k$ and $\beta_i \cap \beta_j = \emptyset$ if $i \neq j$). Prove that $V = \mathrm{span}(\beta_1) \oplus \mathrm{span}(\beta_2) \oplus \cdots \oplus \mathrm{span}(\beta_k)$.

22. Let $T$ be a linear operator on a finite-dimensional vector space $V$, and suppose that the distinct eigenvalues of $T$ are $\lambda_1, \lambda_2, \ldots, \lambda_k$. Prove that $\mathrm{span}(\{x \in V : x \text{ is an eigenvector of } T\}) = E_{\lambda_1} \oplus E_{\lambda_2} \oplus \cdots \oplus E_{\lambda_k}$.

23. Let $W_1, W_2, K_1, K_2, \ldots, K_p, M_1, M_2, \ldots, M_q$ be subspaces of a vector space $V$ such that $W_1 = K_1 \oplus K_2 \oplus \cdots \oplus K_p$ and $W_2 = M_1 \oplus M_2 \oplus \cdots \oplus M_q$. Prove that if $W_1 \cap W_2 = \{0\}$, then $W_1 + W_2 = W_1 \oplus W_2 = K_1 \oplus K_2 \oplus \cdots \oplus K_p \oplus M_1 \oplus M_2 \oplus \cdots \oplus M_q$.

Sec. 5.3 Matrix Limits and Markov Chains

1. Label the following statements as true or false.
   (a) If $A \in M_{n \times n}(C)$ and $\lim_{m \to \infty} A^m = L$, then, for any invertible matrix $Q \in M_{n \times n}(C)$, we have $\lim_{m \to \infty} QA^mQ^{-1} = QLQ^{-1}$.
   (b) If 2 is an eigenvalue of $A \in M_{n \times n}(C)$, then $\lim_{m \to \infty} A^m$ does not exist.
   (c) Any vector $\begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix} \in R^n$ such that $x_1 + x_2 + \cdots + x_n = 1$ is a probability vector.
   (d) The sum of the entries of each row of a transition matrix equals 1.
   (e) The product of a transition matrix and a probability vector is a probability vector.
   (f) Let $z$ be any complex number such that $|z| < 1$. Then the matrix $\begin{pmatrix} 1 & z & -1 \\ z & 1 & 1 \\ -1 & 1 & z \end{pmatrix}$ does not have 3 as an eigenvalue.
   (g) Every transition matrix has 1 as an eigenvalue.
   (h) No transition matrix can have $-1$ as an eigenvalue.
   (i) If $A$ is a transition matrix, then $\lim_{m \to \infty} A^m$ exists.
   (j) If $A$ is a regular transition matrix, then $\lim_{m \to \infty} A^m$ exists and has rank 1.

2. Determine whether $\lim_{m \to \infty} A^m$ exists for each of the following matrices $A$, and compute the limit if it exists.
   (a) $\begin{pmatrix} 0.1 & 0.7 \\ 0.7 & 0.1 \end{pmatrix}$
   (b) $\begin{pmatrix} -1.4 & 0.8 \\ -2.4 & 1.8 \end{pmatrix}$
   (c) $\begin{pmatrix} 0.4 & 0.7 \\ 0.6 & 0.3 \end{pmatrix}$
   (d) $\begin{pmatrix} -1.8 & 4.8 \\ -0.8 & 2.2 \end{pmatrix}$
   (e) $\begin{pmatrix} -2 & -1 \\ 4 & 3 \end{pmatrix}$
   (f) $\begin{pmatrix} 2.0 & -0.5 \\ 3.0 & -0.5 \end{pmatrix}$
   (g) $\begin{pmatrix} -1.8 & 0 & -1.4 \\ -5.6 & 1 & -2.8 \\ 2.8 & 0 & 2.4 \end{pmatrix}$
   (h) $\begin{pmatrix} 3.4 & -0.2 & 0.8 \\ 3.9 & 1.8 & 1.3 \\ -16.5 & -2.0 & -4.5 \end{pmatrix}$
   (i) $\begin{pmatrix} -\frac{1}{2} - 2i & 4i & \frac{1}{2} + 5i \\ 1 + 2i & -3i & -1 - 4i \\ -1 - 2i & 4i & 1 + 5i \end{pmatrix}$
   (j) $\begin{pmatrix} \frac{-26 + i}{3} & \frac{-28 - 4i}{3} & 28 \\ \frac{-7 + 2i}{3} & \frac{-5 + i}{3} & 7 - 2i \\ \frac{-13 + 6i}{6} & \frac{-5 + 6i}{6} & \frac{35 - 20i}{6} \end{pmatrix}$

3. Prove that if $A_1, A_2, \ldots$ is a sequence of $n \times p$ matrices with complex entries such that $\lim_{m \to \infty} A_m = L$, then $\lim_{m \to \infty} (A_m)^t = L^t$.

4. Prove that if $A \in M_{n \times n}(C)$ is diagonalizable and $L = \lim_{m \to \infty} A^m$ exists, then either $L = I_n$ or $\mathrm{rank}(L) < n$.

5. Find $2 \times 2$ matrices $A$ and $B$ having real entries such that $\lim_{m \to \infty} A^m$, $\lim_{m \to \infty} B^m$, and $\lim_{m \to \infty} (AB)^m$ all exist, but
   $$\lim_{m \to \infty} (AB)^m \neq \left(\lim_{m \to \infty} A^m\right)\left(\lim_{m \to \infty} B^m\right).$$

6. A hospital trauma unit has determined that 30% of its patients are ambulatory and 70% are bedridden at the time of arrival at the hospital. A month after arrival, 60% of the ambulatory patients have recovered, 20% remain ambulatory, and 20% have become bedridden. After the same amount of time, 10% of the bedridden patients have recovered, 20% have become ambulatory, 50% remain bedridden, and 20% have died. Determine the percentages of patients who have recovered, are ambulatory, are bedridden, and have died 1 month after arrival. Also determine the eventual percentages of patients of each type.

7. A player begins a game of chance by placing a marker in box 2, marked Start. (See Figure 5.5.) A die is rolled, and the marker is moved one square to the left if a 1 or a 2 is rolled and one square to the right if a 3, 4, 5, or 6 is rolled. This process continues until the marker lands in square 1, in which case the player wins the game, or in square 4, in which case the player loses the game. What is the probability of winning this game?
   Hint: Instead of diagonalizing the appropriate transition matrix $A$, it is easier to represent $e_2$ as a linear combination of eigenvectors of $A$ and then apply $A^n$ to the result.

   [Figure 5.5: a row of four squares numbered 1 to 4; square 1 is labeled Win, square 2 Start, and square 4 Lose.]

8. Which of the following transition matrices are regular?
   (a) $\begin{pmatrix} 0.2 & 0.3 & 0.5 \\ 0.3 & 0.2 & 0.5 \\ 0.5 & 0.5 & 0 \end{pmatrix}$
   (b) $\begin{pmatrix} 0.5 & 0 & 1 \\ 0.5 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix}$
   (c) $\begin{pmatrix} 0.5 & 0 & 0 \\ 0.5 & 0 & 1 \\ 0 & 1 & 0 \end{pmatrix}$
   (d) $\begin{pmatrix} 0.5 & 0 & 1 \\ 0.5 & 1 & 0 \\ 0 & 0 & 0 \end{pmatrix}$
   (e) $\begin{pmatrix} \frac{1}{3} & 0 & 0 \\ \frac{1}{3} & 1 & 0 \\ \frac{1}{3} & 0 & 1 \end{pmatrix}$
   (f) $\begin{pmatrix} 1 & 0 & 0 \\ 0 & 0.7 & 0.2 \\ 0 & 0.3 & 0.8 \end{pmatrix}$
   (g) $\begin{pmatrix} 0 & \frac{1}{2} & 0 & 0 \\ \frac{1}{2} & 0 & 0 & 0 \\ \frac{1}{4} & \frac{1}{4} & 1 & 0 \\ \frac{1}{4} & \frac{1}{4} & 0 & 1 \end{pmatrix}$
   (h) $\begin{pmatrix} \frac{1}{4} & \frac{1}{4} & 0 & 0 \\ \frac{1}{4} & \frac{1}{4} & 0 & 0 \\ \frac{1}{4} & \frac{1}{4} & 1 & 0 \\ \frac{1}{4} & \frac{1}{4} & 0 & 1 \end{pmatrix}$

9. Compute $\lim_{m \to \infty} A^m$ if it exists, for each matrix $A$ in Exercise 8.

10. Each of the matrices that follow is a regular transition matrix for a three-state Markov chain. In all cases, the initial probability vector is $P = \begin{pmatrix} 0.3 \\ 0.3 \\ 0.4 \end{pmatrix}$. For each transition matrix, compute the proportions of objects in each state after two stages and the eventual proportions of objects in each state by determining the fixed probability vector.
   (a) $\begin{pmatrix} 0.6 & 0.1 & 0.1 \\ 0.1 & 0.9 & 0.2 \\ 0.3 & 0 & 0.7 \end{pmatrix}$
   (b) $\begin{pmatrix} 0.8 & 0.1 & 0.2 \\ 0.1 & 0.8 & 0.2 \\ 0.1 & 0.1 & 0.6 \end{pmatrix}$
   (c) $\begin{pmatrix} 0.9 & 0.1 & 0.1 \\ 0.1 & 0.6 & 0.1 \\ 0 & 0.3 & 0.8 \end{pmatrix}$
   (d) $\begin{pmatrix} 0.4 & 0.2 & 0.2 \\ 0.1 & 0.7 & 0.2 \\ 0.5 & 0.1 & 0.6 \end{pmatrix}$
   (e) $\begin{pmatrix} 0.5 & 0.3 & 0.2 \\ 0.2 & 0.5 & 0.3 \\ 0.3 & 0.2 & 0.5 \end{pmatrix}$
   (f) $\begin{pmatrix} 0.6 & 0 & 0.4 \\ 0.2 & 0.8 & 0.2 \\ 0.2 & 0.2 & 0.4 \end{pmatrix}$

11. In 1940, a county land-use survey showed that 10% of the county land was urban, 50% was unused, and 40% was agricultural. Five years later, a follow-up survey revealed that 70% of the urban land had remained urban, 10% had become unused, and 20% had become agricultural. Likewise, 20% of the unused land had become urban, 60% had remained unused, and 20% had become agricultural. Finally, the 1945 survey showed that 20% of the agricultural land had become unused while 80% remained agricultural.
   Assuming that the trends indicated by the 1945 survey continue, compute the percentages of urban, unused, and agricultural land in the county in 1950 and the corresponding eventual percentages.

12. A diaper liner is placed in each diaper worn by a baby. If, after a diaper change, the liner is soiled, then it is discarded and replaced by a new liner. Otherwise, the liner is washed with the diapers and reused, except that each liner is discarded and replaced after its third use (even if it has never been soiled). The probability that the baby will soil any diaper liner is one-third. If there are only new diaper liners at first, eventually what proportions of the diaper liners being used will be new, once used, and twice used? Hint: Assume that a diaper liner ready for use is in one of three states: new, once used, and twice used. After its use, it then transforms into one of the three states described.

13. In 1975, the automobile industry determined that 40% of American car owners drove large cars, 20% drove intermediate-sized cars, and 40% drove small cars. A second survey in 1985 showed that 70% of the large-car owners in 1975 still owned large cars in 1985, but 30% had changed to an intermediate-sized car. Of those who owned intermediate-sized cars in 1975, 10% had switched to large cars, 70% continued to drive intermediate-sized cars, and 20% had changed to small cars in 1985. Finally, of the small-car owners in 1975, 10% owned intermediate-sized cars and 90% owned small cars in 1985. Assuming that these trends continue, determine the percentages of Americans who own cars of each size in 1995 and the corresponding eventual percentages.

14. Show that if $A$ and $P$ are as in Example 5, then
   $$A^m = \begin{pmatrix} r_m & r_{m+1} & r_{m+1} \\ r_{m+1} & r_m & r_{m+1} \\ r_{m+1} & r_{m+1} & r_m \end{pmatrix}, \quad \text{where} \quad r_m = \frac{1}{3}\left[1 + \frac{(-1)^m}{2^{m-1}}\right].$$
   Deduce that
   $$600(A^m P) = A^m \begin{pmatrix} 300 \\ 200 \\ 100 \end{pmatrix} = \begin{pmatrix} 200 + \frac{(-1)^m}{2^m}(100) \\ 200 \\ 200 + \frac{(-1)^{m+1}}{2^m}(100) \end{pmatrix}.$$

15. Prove that if a 1-dimensional subspace $W$ of $R^n$ contains a nonzero vector with all nonnegative entries, then $W$ contains a unique probability vector.

16. Prove Theorem 5.15 and its corollary.

17. Prove the two corollaries of Theorem 5.18.

18. Prove the corollary of Theorem 5.19.

19. Suppose that $M$ and $M'$ are $n \times n$ transition matrices.
   (a) Prove that if $M$ is regular, $N$ is any $n \times n$ transition matrix, and $c$ is a real number such that $0 < c \le 1$, then $cM + (1 - c)N$ is a regular transition matrix.
   (b) Suppose that for all $i, j$, we have that $M'_{ij} > 0$ whenever $M_{ij} > 0$. Prove that there exists a transition matrix $N$ and a real number $c$ with $0 < c \le 1$ such that $M' = cM + (1 - c)N$.
   (c) Deduce that if the nonzero entries of $M$ and $M'$ occur in the same positions, then $M$ is regular if and only if $M'$ is regular.

The following definition is used in Exercises 20–24.

Definition. For $A \in M_{n \times n}(C)$, define $e^A = \lim_{m \to \infty} B_m$, where
$$B_m = I + A + \frac{A^2}{2!} + \cdots + \frac{A^m}{m!}$$
(see Exercise 22). Thus $e^A$ is the sum of the infinite series
$$I + A + \frac{A^2}{2!} + \frac{A^3}{3!} + \cdots,$$
and $B_m$ is the $m$th partial sum of this series. (Note the analogy with the power series
$$e^a = 1 + a + \frac{a^2}{2!} + \frac{a^3}{3!} + \cdots,$$
which is valid for all complex numbers $a$.)

20. Compute $e^O$ and $e^I$, where $O$ and $I$ denote the $n \times n$ zero and identity matrices, respectively.

21. Let $P^{-1}AP = D$ be a diagonal matrix. Prove that $e^A = Pe^DP^{-1}$.

22. Let $A \in M_{n \times n}(C)$ be diagonalizable. Use the result of Exercise 21 to show that $e^A$ exists. (Exercise 21 of Section 7.2 shows that $e^A$ exists for every $A \in M_{n \times n}(C)$.)

23. Find $A, B \in M_{2 \times 2}(R)$ such that $e^A e^B \neq e^{A+B}$.

24. Prove that a differentiable function $x\colon R \to R^n$ is a solution to the system of differential equations defined in Exercise 15 of Section 5.2 if and only if $x(t) = e^{tA}v$ for some $v \in R^n$, where $A$ is defined in that exercise.

Sec. 5.4 Invariant Subspaces and the Cayley–Hamilton Theorem

1. Label the following statements as true or false.
   (a) There exists a linear operator $T$ with no $T$-invariant subspace.
   (b) If $T$ is a linear operator on a finite-dimensional vector space $V$ and $W$ is a $T$-invariant subspace of $V$, then the characteristic polynomial of $T_W$ divides the characteristic polynomial of $T$.
   (c) Let $T$ be a linear operator on a finite-dimensional vector space $V$, and let $v$ and $w$ be in $V$. If $W$ is the $T$-cyclic subspace generated by $v$, $W'$ is the $T$-cyclic subspace generated by $w$, and $W = W'$, then $v = w$.
   (d) If $T$ is a linear operator on a finite-dimensional vector space $V$, then for any $v \in V$ the $T$-cyclic subspace generated by $v$ is the same as the $T$-cyclic subspace generated by $T(v)$.
   (e) Let $T$ be a linear operator on an $n$-dimensional vector space. Then there exists a polynomial $g(t)$ of degree $n$ such that $g(T) = T_0$.
   (f) Any polynomial of degree $n$ with leading coefficient $(-1)^n$ is the characteristic polynomial of some linear operator.
   (g) If $T$ is a linear operator on a finite-dimensional vector space $V$, and if $V$ is the direct sum of $k$ $T$-invariant subspaces, then there is an ordered basis $\beta$ for $V$ such that $[T]_\beta$ is a direct sum of $k$ matrices.

2. For each of the following linear operators $T$ on the vector space $V$, determine whether the given subspace $W$ is a $T$-invariant subspace of $V$.
   (a) $V = P_3(R)$, $T(f(x)) = f'(x)$, and $W = P_2(R)$
   (b) $V = P(R)$, $T(f(x)) = xf(x)$, and $W = P_2(R)$
   (c) $V = R^3$, $T(a, b, c) = (a + b + c,\ a + b + c,\ a + b + c)$, and $W = \{(t, t, t) : t \in R\}$
   (d) $V = C([0, 1])$, $T(f(t)) = \left[\int_0^1 f(x)\,dx\right] t$, and $W = \{f \in V : f(t) = at + b \text{ for some } a \text{ and } b\}$
   (e) $V = M_{2 \times 2}(R)$, $T(A) = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix} A$, and $W = \{A \in V : A^t = A\}$

3. Let $T$ be a linear operator on a finite-dimensional vector space $V$. Prove that the following subspaces are $T$-invariant.
   (a) $\{0\}$ and $V$
   (b) $N(T)$ and $R(T)$
   (c) $E_\lambda$, for any eigenvalue $\lambda$ of $T$

4. Let $T$ be a linear operator on a vector space $V$, and let $W$ be a $T$-invariant subspace of $V$. Prove that $W$ is $g(T)$-invariant for any polynomial $g(t)$.

5. Let $T$ be a linear operator on a vector space $V$. Prove that the intersection of any collection of $T$-invariant subspaces of $V$ is a $T$-invariant subspace of $V$.

6. For each linear operator $T$ on the vector space $V$, find an ordered basis for the $T$-cyclic subspace generated by the vector $z$.
   (a) $V = R^4$, $T(a, b, c, d) = (a + b,\ b - c,\ a + c,\ a + d)$, and $z = e_1$.
   (b) $V = P_3(R)$, $T(f(x)) = f''(x)$, and $z = x^3$.
   (c) $V = M_{2 \times 2}(R)$, $T(A) = A^t$, and $z = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$.
   (d) $V = M_{2 \times 2}(R)$, $T(A) = \begin{pmatrix} 1 & 1 \\ 2 & 2 \end{pmatrix} A$, and $z = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$.

7. Prove that the restriction of a linear operator $T$ to a $T$-invariant subspace is a linear operator on that subspace.

8. Let $T$ be a linear operator on a vector space with a $T$-invariant subspace $W$. Prove that if $v$ is an eigenvector of $T_W$ with corresponding eigenvalue $\lambda$, then the same is true for $T$.

9. For each linear operator $T$ and cyclic subspace $W$ in Exercise 6, compute the characteristic polynomial of $T_W$ in two ways, as in Example 6.

10. For each linear operator in Exercise 6, find the characteristic polynomial $f(t)$ of $T$, and verify that the characteristic polynomial of $T_W$ (computed in Exercise 9) divides $f(t)$.

11. Let $T$ be a linear operator on a vector space $V$, let $v$ be a nonzero vector in $V$, and let $W$ be the $T$-cyclic subspace of $V$ generated by $v$. Prove that
   (a) $W$ is $T$-invariant.
   (b) Any $T$-invariant subspace of $V$ containing $v$ also contains $W$.

12. Prove that $A = \begin{pmatrix} B_1 & B_2 \\ O & B_3 \end{pmatrix}$ in the proof of Theorem 5.21.

13. Let $T$ be a linear operator on a vector space $V$, let $v$ be a nonzero vector in $V$, and let $W$ be the $T$-cyclic subspace of $V$ generated by $v$. For any $w \in V$, prove that $w \in W$ if and only if there exists a polynomial $g(t)$ such that $w = g(T)(v)$.

14. Prove that the polynomial $g(t)$ of Exercise 13 can always be chosen so that its degree is less than or equal to $\dim(W)$.

15. Use the Cayley–Hamilton theorem (Theorem 5.23) to prove its corollary for matrices.
   Warning: If $f(t) = \det(A - tI)$ is the characteristic polynomial of $A$, it is tempting to "prove" that $f(A) = O$ by saying "$f(A) = \det(A - AI) = \det(O) = 0$." But this argument is nonsense. Why?

16. Let $T$ be a linear operator on a finite-dimensional vector space $V$.
   (a) Prove that if the characteristic polynomial of $T$ splits, then so does the characteristic polynomial of the restriction of $T$ to any $T$-invariant subspace of $V$.
   (b) Deduce that if the characteristic polynomial of $T$ splits, then any nontrivial $T$-invariant subspace of $V$ contains an eigenvector of $T$.

17. Let $A$ be an $n \times n$ matrix. Prove that $\dim(\mathrm{span}(\{I_n, A, A^2, \ldots\})) \le n$.

18. Let $A$ be an $n \times n$ matrix with characteristic polynomial $f(t) = (-1)^n t^n + a_{n-1} t^{n-1} + \cdots + a_1 t + a_0$.
   (a) Prove that $A$ is invertible if and only if $a_0 \neq 0$.
   (b) Prove that if $A$ is invertible, then $A^{-1} = (-1/a_0)\left[(-1)^n A^{n-1} + a_{n-1} A^{n-2} + \cdots + a_1 I_n\right]$.
   (c) Use (b) to compute $A^{-1}$ for $A = \begin{pmatrix} 1 & 2 & 1 \\ 0 & 2 & 3 \\ 0 & 0 & -1 \end{pmatrix}$.

19. Let $A$ denote the $k \times k$ matrix
   $$\begin{pmatrix} 0 & 0 & \cdots & 0 & -a_0 \\ 1 & 0 & \cdots & 0 & -a_1 \\ 0 & 1 & \cdots & 0 & -a_2 \\ \vdots & \vdots & & \vdots & \vdots \\ 0 & 0 & \cdots & 0 & -a_{k-2} \\ 0 & 0 & \cdots & 1 & -a_{k-1} \end{pmatrix},$$
   where $a_0, a_1, \ldots, a_{k-1}$ are arbitrary scalars. Prove that the characteristic polynomial of $A$ is $(-1)^k(a_0 + a_1 t + \cdots + a_{k-1} t^{k-1} + t^k)$. Hint: Use mathematical induction on $k$, expanding the determinant along the first row.

20. Let $T$ be a linear operator on a vector space $V$, and suppose that $V$ is a $T$-cyclic subspace of itself. Prove that if $U$ is a linear operator on $V$, then $UT = TU$ if and only if $U = g(T)$ for some polynomial $g(t)$. Hint: Suppose that $V$ is generated by $v$. Choose $g(t)$ according to Exercise 13 so that $g(T)(v) = U(v)$.

21. Let $T$ be a linear operator on a two-dimensional vector space $V$. Prove that either $V$ is a $T$-cyclic subspace of itself or $T = cI$ for some scalar $c$.

22. Let $T$ be a linear operator on a two-dimensional vector space $V$ and suppose that $T \neq cI$ for any scalar $c$.
   Show that if $U$ is any linear operator on $V$ such that $UT = TU$, then $U = g(T)$ for some polynomial $g(t)$.

23. Let $T$ be a linear operator on a finite-dimensional vector space $V$, and let $W$ be a $T$-invariant subspace of $V$. Suppose that $v_1, v_2, \ldots, v_k$ are eigenvectors of $T$ corresponding to distinct eigenvalues. Prove that if $v_1 + v_2 + \cdots + v_k$ is in $W$, then $v_i \in W$ for all $i$. Hint: Use mathematical induction on $k$.

24. Prove that the restriction of a diagonalizable linear operator $T$ to any nontrivial $T$-invariant subspace is also diagonalizable. Hint: Use the result of Exercise 23.

25. (a) Prove the converse to Exercise 18(a) of Section 5.2: If $T$ and $U$ are diagonalizable linear operators on a finite-dimensional vector space $V$ such that $UT = TU$, then $T$ and $U$ are simultaneously diagonalizable. (See the definitions in the exercises of Section 5.2.) Hint: For any eigenvalue $\lambda$ of $T$, show that $E_\lambda$ is $U$-invariant, and apply Exercise 24 to obtain a basis for $E_\lambda$ of eigenvectors of $U$.
   (b) State and prove a matrix version of (a).

26. Let $T$ be a linear operator on an $n$-dimensional vector space $V$ such that $T$ has $n$ distinct eigenvalues. Prove that $V$ is a $T$-cyclic subspace of itself. Hint: Use Exercise 23 to find a vector $v$ such that $\{v, T(v), \ldots, T^{n-1}(v)\}$ is linearly independent.

Exercises 27 through 32 require familiarity with quotient spaces as defined in Exercise 31 of Section 1.3. Before attempting these exercises, the reader should first review the other exercises treating quotient spaces: Exercise 35 of Section 1.6, Exercise 40 of Section 2.1, and Exercise 24 of Section 2.4.

For the purposes of Exercises 27 through 32, $T$ is a fixed linear operator on a finite-dimensional vector space $V$, and $W$ is a nonzero $T$-invariant subspace of $V$. We require the following definition.

Definition. Let $T$ be a linear operator on a vector space $V$, and let $W$ be a $T$-invariant subspace of $V$.
Define $\overline{T} : V/W \to V/W$ by $\overline{T}(v + W) = T(v) + W$ for any $v + W \in V/W$.

27. (a) Prove that $\overline{T}$ is well defined. That is, show that $\overline{T}(v + W) = \overline{T}(v' + W)$ whenever $v + W = v' + W$.
(b) Prove that $\overline{T}$ is a linear operator on $V/W$.
(c) Let $\eta : V \to V/W$ be the linear transformation defined in Exercise 40 of Section 2.1 by $\eta(v) = v + W$. Show that the diagram of Figure 5.6 commutes; that is, prove that $\eta T = \overline{T}\eta$. (This exercise does not require the assumption that $V$ is finite-dimensional.)

$$\begin{array}{ccc} V & \xrightarrow{\;T\;} & V \\ \Big\downarrow{\scriptstyle \eta} & & \Big\downarrow{\scriptstyle \eta} \\ V/W & \xrightarrow{\;\overline{T}\;} & V/W \end{array}$$

Figure 5.6

28. Let $f(t)$, $g(t)$, and $h(t)$ be the characteristic polynomials of $T$, $T_W$, and $\overline{T}$, respectively. Prove that $f(t) = g(t)h(t)$. Hint: Extend an ordered basis $\gamma = \{v_1, v_2, \ldots, v_k\}$ for $W$ to an ordered basis $\beta = \{v_1, v_2, \ldots, v_k, v_{k+1}, \ldots, v_n\}$ for $V$. Then show that the collection of cosets $\alpha = \{v_{k+1} + W, v_{k+2} + W, \ldots, v_n + W\}$ is an ordered basis for $V/W$, and prove that
$$[T]_\beta = \begin{pmatrix} B_1 & B_2 \\ O & B_3 \end{pmatrix},$$
where $B_1 = [T]_\gamma$ and $B_3 = [\overline{T}]_\alpha$.

29. Use the hint in Exercise 28 to prove that if $T$ is diagonalizable, then so is $\overline{T}$.

30. Prove that if both $T_W$ and $\overline{T}$ are diagonalizable and have no common eigenvalues, then $T$ is diagonalizable.

The results of Theorem 5.22 and Exercise 28 are useful in devising methods for computing characteristic polynomials without the use of determinants. This is illustrated in the next exercise.

31. Let $A = \begin{pmatrix} 1 & 1 & -3 \\ 2 & 3 & 4 \\ 1 & 2 & 1 \end{pmatrix}$, let $T = L_A$, and let $W$ be the cyclic subspace of $R^3$ generated by $e_1$.
(a) Use Theorem 5.22 to compute the characteristic polynomial of $T_W$.
(b) Show that $\{e_2 + W\}$ is a basis for $R^3/W$, and use this fact to compute the characteristic polynomial of $\overline{T}$.
(c) Use the results of (a) and (b) to find the characteristic polynomial of $A$.

32. Prove the converse to Exercise 9(a) of Section 5.2: If the characteristic polynomial of $T$ splits, then there is an ordered basis $\beta$ for $V$ such that $[T]_\beta$ is an upper triangular matrix. Hints: Apply mathematical induction to $\dim(V)$.
First prove that $T$ has an eigenvector $v$, let $W = \operatorname{span}(\{v\})$, and apply the induction hypothesis to $\overline{T} : V/W \to V/W$. Exercise 35(b) of Section 1.6 is helpful here.

Exercises 33 through 40 are concerned with direct sums.

33. Let $T$ be a linear operator on a vector space $V$, and let $W_1, W_2, \ldots, W_k$ be $T$-invariant subspaces of $V$. Prove that $W_1 + W_2 + \cdots + W_k$ is also a $T$-invariant subspace of $V$.

34. Give a direct proof of Theorem 5.25 for the case $k = 2$. (This result is used in the proof of Theorem 5.24.)

35. Prove Theorem 5.25. Hint: Begin with Exercise 34 and extend it using mathematical induction on $k$, the number of subspaces.

36. Let $T$ be a linear operator on a finite-dimensional vector space $V$. Prove that $T$ is diagonalizable if and only if $V$ is the direct sum of one-dimensional $T$-invariant subspaces.

37. Let $T$ be a linear operator on a finite-dimensional vector space $V$, and let $W_1, W_2, \ldots, W_k$ be $T$-invariant subspaces of $V$ such that $V = W_1 \oplus W_2 \oplus \cdots \oplus W_k$. Prove that
$$\det(T) = \det(T_{W_1}) \det(T_{W_2}) \cdots \det(T_{W_k}).$$

38. Let $T$ be a linear operator on a finite-dimensional vector space $V$, and let $W_1, W_2, \ldots, W_k$ be $T$-invariant subspaces of $V$ such that $V = W_1 \oplus W_2 \oplus \cdots \oplus W_k$. Prove that $T$ is diagonalizable if and only if $T_{W_i}$ is diagonalizable for all $i$.

39. Let $\mathcal{C}$ be a collection of diagonalizable linear operators on a finite-dimensional vector space $V$. Prove that there is an ordered basis $\beta$ such that $[T]_\beta$ is a diagonal matrix for all $T \in \mathcal{C}$ if and only if the operators of $\mathcal{C}$ commute under composition. (This is an extension of Exercise 25.) Hints for the case that the operators commute: The result is trivial if each operator has only one eigenvalue. Otherwise, establish the general result by mathematical induction on $\dim(V)$, using the fact that $V$ is the direct sum of the eigenspaces of some operator in $\mathcal{C}$ that has more than one eigenvalue.

40. Let $B_1, B_2, \ldots, B_k$ be square matrices with entries in the same field, and let $A = B_1 \oplus B_2 \oplus \cdots \oplus B_k$. Prove that the characteristic polynomial of $A$ is the product of the characteristic polynomials of the $B_i$'s.

41. Let
$$A = \begin{pmatrix} 1 & 2 & \cdots & n \\ n+1 & n+2 & \cdots & 2n \\ \vdots & \vdots & & \vdots \\ n^2-n+1 & n^2-n+2 & \cdots & n^2 \end{pmatrix}.$$
Find the characteristic polynomial of $A$. Hint: First prove that $A$ has rank 2 and that $\operatorname{span}(\{(1, 1, \ldots, 1), (1, 2, \ldots, n)\})$ is $L_A$-invariant.

42. Let $A \in M_{n \times n}(R)$ be the matrix defined by $A_{ij} = 1$ for all $i$ and $j$. Find the characteristic polynomial of $A$.
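
As a numerical sanity check on the inverse formula of Exercise 18(b), the following Python sketch applies it to the matrix of Exercise 18(c) using exact rational arithmetic. It is an illustration, not a proof. The helper names (`char_poly_triangular`, `inverse_via_cayley_hamilton`) are made up for this example, and the characteristic polynomial routine assumes a triangular matrix, which holds for this particular $A$ but not in general.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def identity(n):
    """The n x n identity matrix with Fraction entries."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def char_poly_triangular(A):
    """Coefficients [a0, a1, ..., an] of det(A - tI).

    Assumption: A is triangular, so det(A - tI) is the product of
    (d - t) over the diagonal entries d.
    """
    coeffs = [Fraction(1)]
    for i in range(len(A)):
        d = Fraction(A[i][i])
        new = [Fraction(0)] * (len(coeffs) + 1)
        for k, c in enumerate(coeffs):
            new[k] += d * c      # contribution of d * (c t^k)
            new[k + 1] -= c      # contribution of -t * (c t^k)
        coeffs = new
    return coeffs


def inverse_via_cayley_hamilton(A):
    """Inverse from f(A) = O: A^{-1} = (-1/a0)(a1 I + a2 A + ... + an A^{n-1})."""
    n = len(A)
    a = char_poly_triangular(A)
    if a[0] == 0:
        raise ValueError("a0 = 0, so A is not invertible")
    S = [[Fraction(0)] * n for _ in range(n)]
    P = identity(n)  # holds A^(k-1) at step k
    for k in range(1, n + 1):
        S = [[S[i][j] + a[k] * P[i][j] for j in range(n)] for i in range(n)]
        P = mat_mul(P, A)
    return [[-S[i][j] / a[0] for j in range(n)] for i in range(n)]


A = [[Fraction(x) for x in row] for row in [[1, 2, 1], [0, 2, 3], [0, 0, -1]]]
A_inv = inverse_via_cayley_hamilton(A)
```

Here the coefficients come out as $a_0 = -2$, $a_1 = 1$, $a_2 = 2$, so the formula reads $A^{-1} = \tfrac{1}{2}(-A^2 + 2A + I_3)$, and multiplying `A` by `A_inv` with `mat_mul` returns the identity.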
