KoreanFoodie's Study

Solutions to Linear Algebra, Stephen H. Friedberg, Fourth Edition (Chapter 6)

GoldGiver 2019. 6. 15. 13:22

Sec. 6.1 Inner Products and Norms

1. Label the following statements as true or false.
  (a) An inner product is a scalar-valued function on the set of ordered pairs of vectors.
  (b) An inner product space must be over the field of real or complex numbers.
  (c) An inner product is linear in both components.
  (d) There is exactly one inner product on the vector space $R^n$.
  (e) The triangle inequality only holds in finite-dimensional inner product spaces.
  (f) Only square matrices have a conjugate-transpose.
  (g) If $x$, $y$, and $z$ are vectors in an inner product space such that $\langle x, y \rangle = \langle x, z \rangle$, then $y = z$.
  (h) If $\langle x, y \rangle = 0$ for all $x$ in an inner product space, then $y = 0$.
2. Let $x = (2, 1+i, i)$ and $y = (2-i, 2, 1+2i)$ be vectors in $C^3$. Compute $\langle x, y \rangle$, $\|x\|$, $\|y\|$, and $\|x+y\|$. Then verify both the Cauchy–Schwarz inequality and the triangle inequality.
3. In $C([0,1])$, let $f(t) = t$ and $g(t) = e^t$. Compute $\langle f, g \rangle$ (as defined in Example 3), $\|f\|$, $\|g\|$, and $\|f+g\|$. Then verify both the Cauchy–Schwarz inequality and the triangle inequality.
4. (a) Complete the proof in Example 5 that $\langle \cdot, \cdot \rangle$ is an inner product (the Frobenius inner product) on $M_{n \times n}(F)$.
  (b) Use the Frobenius inner product to compute $\|A\|$, $\|B\|$, and $\langle A, B \rangle$ for
  $A = \begin{pmatrix} 1 & 2+i \\ 3 & i \end{pmatrix}$ and $B = \begin{pmatrix} 1+i & 0 \\ i & -i \end{pmatrix}$.
5. In $C^2$, show that $\langle x, y \rangle = xAy^*$ is an inner product, where
  $A = \begin{pmatrix} 1 & i \\ -i & 2 \end{pmatrix}$.
  Compute $\langle x, y \rangle$ for $x = (1-i, 2+3i)$ and $y = (2+i, 3-2i)$.
6. Complete the proof of Theorem 6.1.
7. Complete the proof of Theorem 6.2.
8. Provide reasons why each of the following is not an inner product on the given vector spaces.
  (a) $\langle (a,b), (c,d) \rangle = ac - bd$ on $R^2$.
  (b) $\langle A, B \rangle = \mathrm{tr}(A+B)$ on $M_{2 \times 2}(R)$.
  (c) $\langle f(x), g(x) \rangle = \int_0^1 f'(t) g(t)\,dt$ on $P(R)$, where $'$ denotes differentiation.
9. Let $\beta$ be a basis for a finite-dimensional inner product space.
  (a) Prove that if $\langle x, z \rangle = 0$ for all $z \in \beta$, then $x = 0$.
  (b) Prove that if $\langle x, z \rangle = \langle y, z \rangle$ for all $z \in \beta$, then $x = y$.
10. † Let V be an inner product space, and suppose that $x$ and $y$ are orthogonal vectors in V. Prove that $\|x+y\|^2 = \|x\|^2 + \|y\|^2$.
Deduce the Pythagorean theorem in $R^2$.
11. Prove the parallelogram law on an inner product space V; that is, show that $\|x+y\|^2 + \|x-y\|^2 = 2\|x\|^2 + 2\|y\|^2$ for all $x, y \in V$. What does this equation state about parallelograms in $R^2$?
12. † Let $\{v_1, v_2, \ldots, v_k\}$ be an orthogonal set in V, and let $a_1, a_2, \ldots, a_k$ be scalars. Prove that
  $\left\| \sum_{i=1}^{k} a_i v_i \right\|^2 = \sum_{i=1}^{k} |a_i|^2 \|v_i\|^2$.
13. Suppose that $\langle \cdot, \cdot \rangle_1$ and $\langle \cdot, \cdot \rangle_2$ are two inner products on a vector space V. Prove that $\langle \cdot, \cdot \rangle = \langle \cdot, \cdot \rangle_1 + \langle \cdot, \cdot \rangle_2$ is another inner product on V.
14. Let $A$ and $B$ be $n \times n$ matrices, and let $c$ be a scalar. Prove that $(A + cB)^* = A^* + \overline{c} B^*$.
15. (a) Prove that if V is an inner product space, then $|\langle x, y \rangle| = \|x\| \cdot \|y\|$ if and only if one of the vectors $x$ or $y$ is a multiple of the other. Hint: If the identity holds and $y \neq 0$, let
  $a = \dfrac{\langle x, y \rangle}{\|y\|^2}$,
  and let $z = x - ay$. Prove that $y$ and $z$ are orthogonal and
  $|a| = \dfrac{\|x\|}{\|y\|}$.
  Then apply Exercise 10 to $\|x\|^2 = \|ay + z\|^2$ to obtain $\|z\| = 0$.
  (b) Derive a similar result for the equality $\|x+y\| = \|x\| + \|y\|$, and generalize it to the case of $n$ vectors.
16. (a) Show that the vector space H with $\langle \cdot, \cdot \rangle$ defined on page 332 is an inner product space.
  (b) Let $V = C([0,1])$, and define

  $\langle f, g \rangle = \int_0^{1/2} f(t) g(t)\,dt$.
  Is this an inner product on V?
17. Let T be a linear operator on an inner product space V, and suppose that $\|T(x)\| = \|x\|$ for all $x$. Prove that T is one-to-one.
18. Let V be a vector space over $F$, where $F = R$ or $F = C$, and let W be an inner product space over $F$ with inner product $\langle \cdot, \cdot \rangle$. If $T: V \to W$ is linear, prove that $\langle x, y \rangle' = \langle T(x), T(y) \rangle$ defines an inner product on V if and only if T is one-to-one.
19. Let V be an inner product space. Prove that
  (a) $\|x \pm y\|^2 = \|x\|^2 \pm 2\,\Re\langle x, y \rangle + \|y\|^2$ for all $x, y \in V$, where $\Re\langle x, y \rangle$ denotes the real part of the complex number $\langle x, y \rangle$.
  (b) $\big|\, \|x\| - \|y\| \,\big| \le \|x - y\|$ for all $x, y \in V$.
20. Let V be an inner product space over $F$. Prove the polar identities: For all $x, y \in V$,
  (a) $\langle x, y \rangle = \frac{1}{4}\|x+y\|^2 - \frac{1}{4}\|x-y\|^2$ if $F = R$;
  (b) $\langle x, y \rangle = \frac{1}{4} \sum_{k=1}^{4} i^k \|x + i^k y\|^2$ if $F = C$, where $i^2 = -1$.
21. Let $A$ be an $n \times n$ matrix. Define
  $A_1 = \frac{1}{2}(A + A^*)$ and $A_2 = \frac{1}{2i}(A - A^*)$.
  (a) Prove that $A_1^* = A_1$, $A_2^* = A_2$, and $A = A_1 + iA_2$. Would it be reasonable to define $A_1$ and $A_2$ to be the real and imaginary parts, respectively, of the matrix $A$?
  (b) Let $A$ be an $n \times n$ matrix. Prove that the representation in (a) is unique. That is, prove that if $A = B_1 + iB_2$, where $B_1^* = B_1$ and $B_2^* = B_2$, then $B_1 = A_1$ and $B_2 = A_2$.
22. Let V be a real or complex vector space (possibly infinite-dimensional), and let $\beta$ be a basis for V. For $x, y \in V$ there exist $v_1, v_2, \ldots, v_n \in \beta$ such that
  $x = \sum_{i=1}^{n} a_i v_i$ and $y = \sum_{i=1}^{n} b_i v_i$.
  Define
  $\langle x, y \rangle = \sum_{i=1}^{n} a_i \overline{b_i}$.
  (a) Prove that $\langle \cdot, \cdot \rangle$ is an inner product on V and that $\beta$ is an orthonormal basis for V. Thus every real or complex vector space may be regarded as an inner product space.
  (b) Prove that if $V = R^n$ or $V = C^n$ and $\beta$ is the standard ordered basis, then the inner product defined above is the standard inner product.
23. Let $V = F^n$, and let $A \in M_{n \times n}(F)$.
  (a) Prove that $\langle x, Ay \rangle = \langle A^* x, y \rangle$ for all $x, y \in V$.
  (b) Suppose that for some $B \in M_{n \times n}(F)$, we have $\langle x, Ay \rangle = \langle Bx, y \rangle$ for all $x, y \in V$.
  Prove that $B = A^*$.
  (c) Let $\alpha$ be the standard ordered basis for V. For any orthonormal basis $\beta$ for V, let $Q$ be the $n \times n$ matrix whose columns are the vectors in $\beta$. Prove that $Q^* = Q^{-1}$.
  (d) Define linear operators T and U on V by $T(x) = Ax$ and $U(x) = A^* x$. Show that $[U]_\beta = [T]_\beta^*$ for any orthonormal basis $\beta$ for V.

The following definition is used in Exercises 24–27.

Definition. Let V be a vector space over $F$, where $F$ is either $R$ or $C$. Regardless of whether V is or is not an inner product space, we may still define a norm $\|\cdot\|$ as a real-valued function on V satisfying the following three conditions for all $x, y \in V$ and $a \in F$:
  (1) $\|x\| \ge 0$, and $\|x\| = 0$ if and only if $x = 0$.
  (2) $\|ax\| = |a| \cdot \|x\|$.
  (3) $\|x + y\| \le \|x\| + \|y\|$.

24. Prove that the following are norms on the given vector spaces V.
  (a) $V = M_{m \times n}(F)$; $\|A\| = \max_{i,j} |A_{ij}|$ for all $A \in V$
  (b) $V = C([0,1])$; $\|f\| = \max_{t \in [0,1]} |f(t)|$ for all $f \in V$

  (c) $V = C([0,1])$; $\|f\| = \int_0^1 |f(t)|\,dt$ for all $f \in V$
  (d) $V = R^2$; $\|(a,b)\| = \max\{|a|, |b|\}$ for all $(a,b) \in V$
25. Use Exercise 20 to show that there is no inner product $\langle \cdot, \cdot \rangle$ on $R^2$ such that $\|x\|^2 = \langle x, x \rangle$ for all $x \in R^2$ if the norm is defined as in Exercise 24(d).
26. Let $\|\cdot\|$ be a norm on a vector space V, and define, for each ordered pair of vectors, the scalar $d(x,y) = \|x - y\|$, called the distance between $x$ and $y$. Prove the following results for all $x, y, z \in V$.
  (a) $d(x,y) \ge 0$.
  (b) $d(x,y) = d(y,x)$.
  (c) $d(x,y) \le d(x,z) + d(z,y)$.
  (d) $d(x,x) = 0$.
  (e) $d(x,y) \neq 0$ if $x \neq y$.
27. Let $\|\cdot\|$ be a norm on a real vector space V satisfying the parallelogram law given in Exercise 11. Define
  $\langle x, y \rangle = \frac{1}{4}\left[ \|x+y\|^2 - \|x-y\|^2 \right]$.
  Prove that $\langle \cdot, \cdot \rangle$ defines an inner product on V such that $\|x\|^2 = \langle x, x \rangle$ for all $x \in V$. Hints:
  (a) Prove $\langle x, 2y \rangle = 2\langle x, y \rangle$ for all $x, y \in V$.
  (b) Prove $\langle x + u, y \rangle = \langle x, y \rangle + \langle u, y \rangle$ for all $x, u, y \in V$.
  (c) Prove $\langle nx, y \rangle = n\langle x, y \rangle$ for every positive integer $n$ and every $x, y \in V$.
  (d) Prove $m\left\langle \frac{1}{m}x, y \right\rangle = \langle x, y \rangle$ for every positive integer $m$ and every $x, y \in V$.
  (e) Prove $\langle rx, y \rangle = r\langle x, y \rangle$ for every rational number $r$ and every $x, y \in V$.
  (f) Prove $|\langle x, y \rangle| \le \|x\|\|y\|$ for every $x, y \in V$. Hint: Condition (3) in the definition of norm can be helpful.
  (g) Prove that for every $c \in R$, every rational number $r$, and every $x, y \in V$,
  $|c\langle x, y \rangle - \langle cx, y \rangle| = |(c-r)\langle x, y \rangle - \langle (c-r)x, y \rangle| \le 2|c-r|\,\|x\|\|y\|$.
  (h) Use the fact that for any $c \in R$, $|c - r|$ can be made arbitrarily small, where $r$ varies over the set of rational numbers, to establish item (b) of the definition of inner product.
28. Let V be a complex inner product space with an inner product $\langle \cdot, \cdot \rangle$. Let $[\cdot, \cdot]$ be the real-valued function such that $[x, y]$ is the real part of the complex number $\langle x, y \rangle$ for all $x, y \in V$. Prove that $[\cdot, \cdot]$ is an inner product for V, where V is regarded as a vector space over $R$. Prove, furthermore, that $[x, ix] = 0$ for all $x \in V$.
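As a numerical sanity check on Exercises 25 and 27 (not a proof), the sketch below tests the parallelogram law for the Euclidean norm and for the max norm of Exercise 24(d) on $R^2$, and evaluates the real polar identity for the Euclidean norm. The helper names (`euclid`, `max_norm`, `parallelogram_gap`, `polar`) and the test vectors are illustrative choices, not anything from the textbook.

```python
import math

def euclid(v):
    """Euclidean norm on R^n."""
    return math.sqrt(sum(c * c for c in v))

def max_norm(v):
    """Max norm from Exercise 24(d): the largest |coordinate|."""
    return max(abs(c) for c in v)

def add(u, v):
    return tuple(a + b for a, b in zip(u, v))

def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))

def parallelogram_gap(norm, x, y):
    """||x+y||^2 + ||x-y||^2 - 2||x||^2 - 2||y||^2 (zero when the law holds for x, y)."""
    return (norm(add(x, y)) ** 2 + norm(sub(x, y)) ** 2
            - 2 * norm(x) ** 2 - 2 * norm(y) ** 2)

def polar(norm, x, y):
    """Real polar identity: (||x+y||^2 - ||x-y||^2) / 4."""
    return (norm(add(x, y)) ** 2 - norm(sub(x, y)) ** 2) / 4

x, y = (1.0, 0.0), (0.0, 1.0)
gap_euclid = parallelogram_gap(euclid, x, y)   # approximately 0
gap_max = parallelogram_gap(max_norm, x, y)    # 1 + 1 - 2 - 2 = -2

# For the Euclidean norm the polar identity recovers the dot product:
# (3, 4) . (1, 2) = 3 + 8 = 11.
polar_value = polar(euclid, (3.0, 4.0), (1.0, 2.0))

print(gap_euclid, gap_max, polar_value)
```

A nonzero gap for a single pair of vectors is enough to show that the max norm cannot come from an inner product, since every norm induced by an inner product satisfies the parallelogram law (Exercise 11).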
29. Let V be a vector space over $C$, and suppose that $[\cdot, \cdot]$ is a real inner product on V, where V is regarded as a vector space over $R$, such that $[x, ix] = 0$ for all $x \in V$. Let $\langle \cdot, \cdot \rangle$ be the complex-valued function defined by
  $\langle x, y \rangle = [x, y] + i[x, iy]$ for $x, y \in V$.
  Prove that $\langle \cdot, \cdot \rangle$ is a complex inner product on V.
30. Let $\|\cdot\|$ be a norm (as defined in Exercise 24) on a complex vector space V satisfying the parallelogram law given in Exercise 11. Prove that there is an inner product $\langle \cdot, \cdot \rangle$ on V such that $\|x\|^2 = \langle x, x \rangle$ for all $x \in V$. Hint: Apply Exercise 27 to V regarded as a vector space over $R$. Then apply Exercise 29.

Sec. 6.2 Gram–Schmidt Orthogonalization Process

1. Label the following statements as true or false.
  (a) The Gram–Schmidt orthogonalization process allows us to construct an orthonormal set from an arbitrary set of vectors.
  (b) Every nonzero finite-dimensional inner product space has an orthonormal basis.
  (c) The orthogonal complement of any set is a subspace.
  (d) If $\{v_1, v_2, \ldots, v_n\}$ is a basis for an inner product space V, then for any $x \in V$ the scalars $\langle x, v_i \rangle$ are the Fourier coefficients of $x$.
  (e) An orthonormal basis must be an ordered basis.
  (f) Every orthogonal set is linearly independent.
  (g) Every orthonormal set is linearly independent.
2. In each part, apply the Gram–Schmidt process to the given subset $S$ of the inner product space V to obtain an orthogonal basis for $\mathrm{span}(S)$. Then normalize the vectors in this basis to obtain an orthonormal basis $\beta$ for $\mathrm{span}(S)$, and compute the Fourier coefficients of the given vector relative to $\beta$. Finally, use Theorem 6.5 to verify your result.
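  (a) $V = R^3$, $S = \{(1,0,1), (0,1,1), (1,3,3)\}$, and $x = (1,1,2)$
  (b) $V = R^3$, $S = \{(1,1,1), (0,1,1), (0,0,1)\}$, and $x = (1,0,1)$
  (c) $V = P_2(R)$ with the inner product $\langle f(x), g(x) \rangle = \int_0^1 f(t) g(t)\,dt$, $S = \{1, x, x^2\}$, and $h(x) = 1 + x$
  (d) $V = \mathrm{span}(S)$, where $S = \{(1, i, 0), (1-i, 2, 4i)\}$, and $x = (3+i, 4i, -4)$
  (e) $V = R^4$, $S = \{(2,-1,-2,4), (-2,1,-5,5), (-1,3,7,11)\}$, and $x = (-11, 8, -4, 18)$
  (f) $V = R^4$, $S = \{(1,-2,-1,3), (3,6,3,-1), (1,4,2,8)\}$, and $x = (-1, 2, 1, 1)$
  (g) $V = M_{2 \times 2}(R)$, $S = \left\{ \begin{pmatrix} 3 & 5 \\ -1 & 1 \end{pmatrix}, \begin{pmatrix} -1 & 9 \\ 5 & -1 \end{pmatrix}, \begin{pmatrix} 7 & -17 \\ 2 & -6 \end{pmatrix} \right\}$, and $A = \begin{pmatrix} -1 & 27 \\ -4 & 8 \end{pmatrix}$
  (h) $V = M_{2 \times 2}(R)$, $S = \left\{ \begin{pmatrix} 2 & 2 \\ 2 & 1 \end{pmatrix}, \begin{pmatrix} 11 & 4 \\ 2 & 5 \end{pmatrix}, \begin{pmatrix} 4 & -12 \\ 3 & -16 \end{pmatrix} \right\}$, and $A = \begin{pmatrix} 8 & 6 \\ 25 & -13 \end{pmatrix}$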

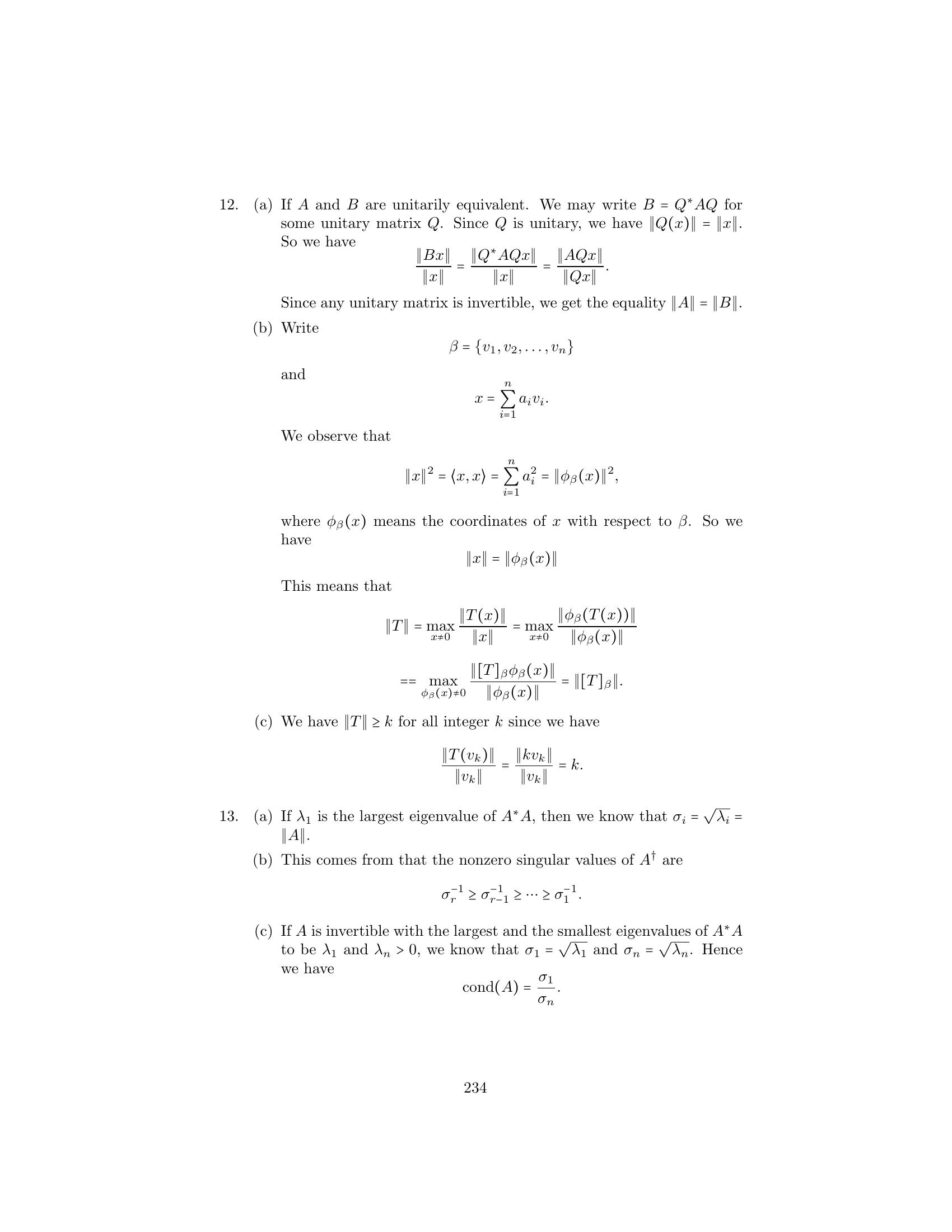

  (i) $V = \mathrm{span}(S)$ with the inner product $\langle f, g \rangle = \int_0^\pi f(t) g(t)\,dt$, $S = \{\sin t, \cos t, 1, t\}$, and $h(t) = 2t + 1$
  (j) $V = C^4$, $S = \{(1, i, 2-i, -1), (2+3i, 3i, 1-i, 2i), (-1+7i, 6+10i, 11-4i, 3+4i)\}$, and $x = (-2+7i, 6+9i, 9-3i, 4+4i)$
  (k) $V = C^4$, $S = \{(-4, 3-2i, i, 1-4i), (-1-5i, 5-4i, -3+5i, 7-2i), (-27-i, -7-6i, -15+25i, -7-6i)\}$, and $x = (-13-7i, -12+3i, -39-11i, -26+5i)$
  (l) $V = M_{2 \times 2}(C)$, $S = \left\{ \begin{pmatrix} 1-i & -2-3i \\ 2+2i & 4+i \end{pmatrix}, \begin{pmatrix} 8i & 4 \\ -3-3i & -4+4i \end{pmatrix}, \begin{pmatrix} -25-38i & -2-13i \\ 12-78i & -7+24i \end{pmatrix} \right\}$, and $A = \begin{pmatrix} -2+8i & -13+i \\ 10-10i & 9-9i \end{pmatrix}$
  (m) $V = M_{2 \times 2}(C)$, $S = \left\{ \begin{pmatrix} -1+i & -i \\ 2-i & 1+3i \end{pmatrix}, \begin{pmatrix} -1-7i & -9-8i \\ 1+10i & -6-2i \end{pmatrix}, \begin{pmatrix} -11-132i & -34-31i \\ 7-126i & -71-5i \end{pmatrix} \right\}$, and $A = \begin{pmatrix} -7+5i & 3+18i \\ 9-6i & -3+7i \end{pmatrix}$
3. In $R^2$, let
  $\beta = \left\{ \left( \frac{1}{\sqrt{2}}, \frac{1}{\sqrt{2}} \right), \left( \frac{1}{\sqrt{2}}, \frac{-1}{\sqrt{2}} \right) \right\}$.
  Find the Fourier coefficients of $(3, 4)$ relative to $\beta$.
4. Let $S = \{(1, 0, i), (1, 2, 1)\}$ in $C^3$. Compute $S^\perp$.
5. Let $S_0 = \{x_0\}$, where $x_0$ is a nonzero vector in $R^3$. Describe $S_0^\perp$ geometrically. Now suppose that $S = \{x_1, x_2\}$ is a linearly independent subset of $R^3$. Describe $S^\perp$ geometrically.
6. Let V be an inner product space, and let W be a finite-dimensional subspace of V. If $x \notin W$, prove that there exists $y \in V$ such that $y \in W^\perp$, but $\langle x, y \rangle \neq 0$. Hint: Use Theorem 6.6.
7. Let $\beta$ be a basis for a subspace W of an inner product space V, and let $z \in V$. Prove that $z \in W^\perp$ if and only if $\langle z, v \rangle = 0$ for every $v \in \beta$.
8. Prove that if $\{w_1, w_2, \ldots, w_n\}$ is an orthogonal set of nonzero vectors, then the vectors $v_1, v_2, \ldots, v_n$ derived from the Gram–Schmidt process satisfy $v_i = w_i$ for $i = 1, 2, \ldots, n$. Hint: Use mathematical induction.
9. Let $W = \mathrm{span}(\{(i, 0, 1)\})$ in $C^3$. Find orthonormal bases for W and $W^\perp$.
10. Let W be a finite-dimensional subspace of an inner product space V. Prove that there exists a projection T on W along $W^\perp$ that satisfies $N(T) = W^\perp$. In addition, prove that $\|T(x)\| \le \|x\|$ for all $x \in V$. Hint: Use Theorem 6.6 and Exercise 10 of Section 6.1.
  (Projections are defined in the exercises of Section 2.1.)
11. Let $A$ be an $n \times n$ matrix with complex entries. Prove that $AA^* = I$ if and only if the rows of $A$ form an orthonormal basis for $C^n$.
12. Prove that for any matrix $A \in M_{m \times n}(F)$, $(R(L_{A^*}))^\perp = N(L_A)$.
13. Let V be an inner product space, $S$ and $S_0$ be subsets of V, and W be a finite-dimensional subspace of V. Prove the following results.
  (a) $S_0 \subseteq S$ implies that $S^\perp \subseteq S_0^\perp$.
  (b) $S \subseteq (S^\perp)^\perp$; so $\mathrm{span}(S) \subseteq (S^\perp)^\perp$.
  (c) $W = (W^\perp)^\perp$. Hint: Use Exercise 6.
  (d) $V = W \oplus W^\perp$. (See the exercises of Section 1.3.)
14. Let $W_1$ and $W_2$ be subspaces of a finite-dimensional inner product space. Prove that $(W_1 + W_2)^\perp = W_1^\perp \cap W_2^\perp$ and $(W_1 \cap W_2)^\perp = W_1^\perp + W_2^\perp$. (See the definition of the sum of subsets of a vector space on page 22.) Hint for the second equation: Apply Exercise 13(c) to the first equation.
15. Let V be a finite-dimensional inner product space over $F$.
  (a) Parseval's Identity. Let $\{v_1, v_2, \ldots, v_n\}$ be an orthonormal basis for V. For any $x, y \in V$ prove that
  $\langle x, y \rangle = \sum_{i=1}^{n} \langle x, v_i \rangle \overline{\langle y, v_i \rangle}$.
  (b) Use (a) to prove that if $\beta$ is an orthonormal basis for V with inner product $\langle \cdot, \cdot \rangle$, then for any $x, y \in V$
  $\langle \phi_\beta(x), \phi_\beta(y) \rangle' = \langle [x]_\beta, [y]_\beta \rangle' = \langle x, y \rangle$,
  where $\langle \cdot, \cdot \rangle'$ is the standard inner product on $F^n$.
16. (a) Bessel's Inequality. Let V be an inner product space, and let $S = \{v_1, v_2, \ldots, v_n\}$ be an orthonormal subset of V. Prove that for any $x \in V$ we have
  $\|x\|^2 \ge \sum_{i=1}^{n} |\langle x, v_i \rangle|^2$.
  Hint: Apply Theorem 6.6 to $x \in V$ and $W = \mathrm{span}(S)$. Then use Exercise 10 of Section 6.1.
  (b) In the context of (a), prove that Bessel's inequality is an equality if and only if $x \in \mathrm{span}(S)$.
17. Let T be a linear operator on an inner product space V. If $\langle T(x), y \rangle = 0$ for all $x, y \in V$, prove that $T = T_0$. In fact, prove this result if the equality holds for all $x$ and $y$ in some basis for V.
18. Let $V = C([-1, 1])$. Suppose that $W_e$ and $W_o$ denote the subspaces of V consisting of the even and odd functions, respectively.
  (See Exercise 22 of Section 1.3.) Prove that $W_e^\perp = W_o$, where the inner product on V is defined by

  $\langle f, g \rangle = \int_{-1}^{1} f(t) g(t)\,dt$.
19. In each of the following parts, find the orthogonal projection of the given vector on the given subspace W of the inner product space V.
  (a) $V = R^2$, $u = (2, 6)$, and $W = \{(x, y) : y = 4x\}$.
  (b) $V = R^3$, $u = (2, 1, 3)$, and $W = \{(x, y, z) : x + 3y - 2z = 0\}$.
  (c) $V = P(R)$ with the inner product $\langle f(x), g(x) \rangle = \int_0^1 f(t) g(t)\,dt$, $h(x) = 4 + 3x - 2x^2$, and $W = P_1(R)$.
20. In each part of Exercise 19, find the distance from the given vector to the subspace W.
21. Let $V = C([-1, 1])$ with the inner product $\langle f, g \rangle = \int_{-1}^{1} f(t) g(t)\,dt$, and let W be the subspace $P_2(R)$, viewed as a space of functions. Use the orthonormal basis obtained in Example 5 to compute the "best" (closest) second-degree polynomial approximation of the function $h(t) = e^t$ on the interval $[-1, 1]$.
22. Let $V = C([0, 1])$ with the inner product $\langle f, g \rangle = \int_0^1 f(t) g(t)\,dt$. Let W be the subspace spanned by the linearly independent set $\{t, \sqrt{t}\}$.
  (a) Find an orthonormal basis for W.
  (b) Let $h(t) = t^2$. Use the orthonormal basis obtained in (a) to obtain the "best" (closest) approximation of $h$ in W.
23. Let V be the vector space defined in Example 5 of Section 1.2, the space of all sequences $\sigma$ in $F$ (where $F = R$ or $F = C$) such that $\sigma(n) \neq 0$ for only finitely many positive integers $n$. For $\sigma, \mu \in V$, we define $\langle \sigma, \mu \rangle = \sum_{n=1}^{\infty} \sigma(n) \overline{\mu(n)}$. Since all but a finite number of terms of the series are zero, the series converges.
  (a) Prove that $\langle \cdot, \cdot \rangle$ is an inner product on V, and hence V is an inner product space.
  (b) For each positive integer $n$, let $e_n$ be the sequence defined by $e_n(k) = \delta_{n,k}$, where $\delta_{n,k}$ is the Kronecker delta. Prove that $\{e_1, e_2, \ldots\}$ is an orthonormal basis for V.
  (c) Let $\sigma_n = e_1 + e_n$ and $W = \mathrm{span}(\{\sigma_n : n \ge 2\})$.
    (i) Prove that $e_1 \notin W$, so $W \neq V$.
    (ii) Prove that $W^\perp = \{0\}$, and conclude that $W \neq (W^\perp)^\perp$.
  Thus the assumption in Exercise 13(c) that W is finite-dimensional is essential.

Sec. 6.3 The Adjoint of a Linear Operator

1. Label the following statements as true or false.
  Assume that the underlying inner product spaces are finite-dimensional.
  (a) Every linear operator has an adjoint.
  (b) Every linear operator on V has the form $x \to \langle x, y \rangle$ for some $y \in V$.
  (c) For every linear operator T on V and every ordered basis $\beta$ for V, we have $[T^*]_\beta = ([T]_\beta)^*$.
  (d) The adjoint of a linear operator is unique.
  (e) For any linear operators T and U and scalars $a$ and $b$, $(aT + bU)^* = aT^* + bU^*$.
  (f) For any $n \times n$ matrix $A$, we have $(L_A)^* = L_{A^*}$.
  (g) For any linear operator T, we have $(T^*)^* = T$.
2. For each of the following inner product spaces V (over $F$) and linear transformations $g: V \to F$, find a vector $y$ such that $g(x) = \langle x, y \rangle$ for all $x \in V$.
  (a) $V = R^3$, $g(a_1, a_2, a_3) = a_1 - 2a_2 + 4a_3$
  (b) $V = C^2$, $g(z_1, z_2) = z_1 - 2z_2$

  (c) $V = P_2(R)$ with $\langle f, h \rangle = \int_0^1 f(t) h(t)\,dt$, $g(f) = f(0) + f'(1)$
3. For each of the following inner product spaces V and linear operators T on V, evaluate $T^*$ at the given vector in V.
  (a) $V = R^2$, $T(a, b) = (2a + b, a - 3b)$, $x = (3, 5)$.
  (b) $V = C^2$, $T(z_1, z_2) = (2z_1 + iz_2, (1 - i)z_1)$, $x = (3 - i, 1 + 2i)$.

  (c) $V = P_1(R)$ with $\langle f, g \rangle = \int_{-1}^{1} f(t) g(t)\,dt$, $T(f) = f' + 3f$, $f(t) = 4 - 2t$
4. Complete the proof of Theorem 6.11.
5. (a) Complete the proof of the corollary to Theorem 6.11 by using Theorem 6.11, as in the proof of (c).
  (b) State a result for nonsquare matrices that is analogous to the corollary to Theorem 6.11, and prove it using a matrix argument.
6. Let T be a linear operator on an inner product space V. Let $U_1 = T + T^*$ and $U_2 = TT^*$. Prove that $U_1 = U_1^*$ and $U_2 = U_2^*$.
7. Give an example of a linear operator T on an inner product space V such that $N(T) \neq N(T^*)$.
8. Let V be a finite-dimensional inner product space, and let T be a linear operator on V. Prove that if T is invertible, then $T^*$ is invertible and $(T^*)^{-1} = (T^{-1})^*$.
9. Prove that if $V = W \oplus W^\perp$ and T is the projection on W along $W^\perp$, then $T = T^*$. Hint: Recall that $N(T) = W^\perp$. (For definitions, see the exercises of Sections 1.3 and 2.1.)
10. Let T be a linear operator on an inner product space V. Prove that $\|T(x)\| = \|x\|$ for all $x \in V$ if and only if $\langle T(x), T(y) \rangle = \langle x, y \rangle$ for all $x, y \in V$. Hint: Use Exercise 20 of Section 6.1.
11. For a linear operator T on an inner product space V, prove that $T^*T = T_0$ implies $T = T_0$. Is the same result true if we assume that $TT^* = T_0$?
12. Let V be an inner product space, and let T be a linear operator on V. Prove the following results.
  (a) $R(T^*)^\perp = N(T)$.
  (b) If V is finite-dimensional, then $R(T^*) = N(T)^\perp$. Hint: Use Exercise 13(c) of Section 6.2.
13. Let T be a linear operator on a finite-dimensional vector space V. Prove the following results.
  (a) $N(T^*T) = N(T)$. Deduce that $\mathrm{rank}(T^*T) = \mathrm{rank}(T)$.
  (b) $\mathrm{rank}(T) = \mathrm{rank}(T^*)$. Deduce from (a) that $\mathrm{rank}(TT^*) = \mathrm{rank}(T)$.
  (c) For any $n \times n$ matrix $A$, $\mathrm{rank}(A^*A) = \mathrm{rank}(AA^*) = \mathrm{rank}(A)$.
14. Let V be an inner product space, and let $y, z \in V$. Define $T: V \to V$ by $T(x) = \langle x, y \rangle z$ for all $x \in V$. First prove that T is linear. Then show that $T^*$ exists, and find an explicit expression for it.
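For Exercise 14, a candidate adjoint can be checked numerically before it is proved. The sketch below assumes $V = C^3$ with the standard inner product and tests the candidate $T^*(w) = \langle w, z \rangle y$ against the defining identity $\langle T(x), w \rangle = \langle x, T^*(w) \rangle$. The names `inner`, `scale`, `T`, `T_star` and the sample vectors are hypothetical; agreement on one pair of vectors supports the guess but is not a proof.

```python
def inner(u, v):
    """Standard inner product on C^n: sum of u_i * conj(v_i)."""
    return sum(a * b.conjugate() for a, b in zip(u, v))

def scale(c, v):
    """Scalar multiple c * v."""
    return [c * a for a in v]

# Fixed vectors y and z defining T(x) = <x, y> z (sample values, chosen arbitrarily).
y = [1 + 2j, -1j, 3 + 0j]
z = [2 - 1j, 4 + 0j, 1 + 1j]

def T(x):
    return scale(inner(x, y), z)

def T_star(w):
    # Candidate adjoint under test: T*(w) = <w, z> y.
    return scale(inner(w, z), y)

x = [1 + 1j, 2 + 0j, -3j]
w = [0 + 1j, 1 - 1j, 5 + 0j]

lhs = inner(T(x), w)        # <T(x), w>
rhs = inner(x, T_star(w))   # <x, T*(w)>
print(lhs, rhs)
```

Both sides reduce to $\langle x, y \rangle \langle z, w \rangle$, which is the computation a written proof would carry out for arbitrary $x$ and $w$.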
The following definition is used in Exercises 15–17 and is an extension of the definition of the adjoint of a linear operator.

Definition. Let $T: V \to W$ be a linear transformation, where V and W are finite-dimensional inner product spaces with inner products $\langle \cdot, \cdot \rangle_1$ and $\langle \cdot, \cdot \rangle_2$, respectively. A function $T^*: W \to V$ is called an adjoint of T if $\langle T(x), y \rangle_2 = \langle x, T^*(y) \rangle_1$ for all $x \in V$ and $y \in W$.

15. Let $T: V \to W$ be a linear transformation, where V and W are finite-dimensional inner product spaces with inner products $\langle \cdot, \cdot \rangle_1$ and $\langle \cdot, \cdot \rangle_2$, respectively. Prove the following results.
  (a) There is a unique adjoint $T^*$ of T, and $T^*$ is linear.
  (b) If $\beta$ and $\gamma$ are orthonormal bases for V and W, respectively, then $[T^*]_\gamma^\beta = ([T]_\beta^\gamma)^*$.
  (c) $\mathrm{rank}(T^*) = \mathrm{rank}(T)$.
  (d) $\langle T^*(x), y \rangle_1 = \langle x, T(y) \rangle_2$ for all $x \in W$ and $y \in V$.
  (e) For all $x \in V$, $T^*T(x) = 0$ if and only if $T(x) = 0$.
16. State and prove a result that extends the first four parts of Theorem 6.11 using the preceding definition.
17. Let $T: V \to W$ be a linear transformation, where V and W are finite-dimensional inner product spaces. Prove that $(R(T^*))^\perp = N(T)$, using the preceding definition.
18. † Let $A$ be an $n \times n$ matrix. Prove that $\det(A^*) = \overline{\det(A)}$.
19. Suppose that $A$ is an $m \times n$ matrix in which no two columns are identical. Prove that $A^*A$ is a diagonal matrix if and only if every pair of columns of $A$ is orthogonal.
20. For each of the sets of data that follows, use the least squares approximation to find the best fits with both (i) a linear function and (ii) a quadratic function. Compute the error $E$ in both cases.
  (a) $\{(-3, 9), (-2, 6), (0, 2), (1, 1)\}$
  (b) $\{(1, 2), (3, 4), (5, 7), (7, 9), (9, 12)\}$
  (c) $\{(-2, 4), (-1, 3), (0, 1), (1, -1), (2, -3)\}$
21. In physics, Hooke's law states that (within certain limits) there is a linear relationship between the length $x$ of a spring and the force $y$ applied to (or exerted by) the spring. That is, $y = cx + d$, where $c$ is called the spring constant.
  Use the following data to estimate the spring constant (the length is given in inches and the force is given in pounds).

  Length x   3.5   4.0   4.5   5.0
  Force y    1.0   2.2   2.8   4.3

22. Find the minimal solution to each of the following systems of linear equations.
  (a) $x + 2y - z = 12$
  (b) $x + 2y - z = 1$, $2x + 3y + z = 2$, $4x + 7y - z = 4$
  (c) $x + y - z = 0$, $2x - y + z = 3$, $x - y + z = 2$
  (d) $x + y + z - w = 1$, $2x - y + w = 1$
23. Consider the problem of finding the least squares line $y = ct + d$ corresponding to the $m$ observations $(t_1, y_1), (t_2, y_2), \ldots, (t_m, y_m)$.
  (a) Show that the equation $(A^*A)x_0 = A^*y$ of Theorem 6.12 takes the form of the normal equations:
  $\left( \sum_{i=1}^{m} t_i^2 \right) c + \left( \sum_{i=1}^{m} t_i \right) d = \sum_{i=1}^{m} t_i y_i$
  and
  $\left( \sum_{i=1}^{m} t_i \right) c + md = \sum_{i=1}^{m} y_i$.
  These equations may also be obtained from the error $E$ by setting the partial derivatives of $E$ with respect to both $c$ and $d$ equal to zero.
  (b) Use the second normal equation of (a) to show that the least squares line must pass through the center of mass, $(\bar{t}, \bar{y})$, where
  $\bar{t} = \frac{1}{m} \sum_{i=1}^{m} t_i$ and $\bar{y} = \frac{1}{m} \sum_{i=1}^{m} y_i$.
24. Let V and $\{e_1, e_2, \ldots\}$ be defined as in Exercise 23 of Section 6.2. Define $T: V \to V$ by
  $T(\sigma)(k) = \sum_{i=k}^{\infty} \sigma(i)$ for every positive integer $k$.
  Notice that the infinite series in the definition of T converges because $\sigma(i) \neq 0$ for only finitely many $i$.
  (a) Prove that T is a linear operator on V.
  (b) Prove that for any positive integer $n$, $T(e_n) = \sum_{i=1}^{n} e_i$.
  (c) Prove that T has no adjoint. Hint: By way of contradiction, suppose that $T^*$ exists. Prove that for any positive integer $n$, $T^*(e_n)(k) \neq 0$ for infinitely many $k$.

Sec. 6.4 Normal and Self-Adjoint Operators

1. Label the following statements as true or false. Assume that the underlying inner product spaces are finite-dimensional.
  (a) Every self-adjoint operator is normal.
  (b) Operators and their adjoints have the same eigenvectors.
  (c) If T is an operator on an inner product space V, then T is normal if and only if $[T]_\beta$ is normal, where $\beta$ is any ordered basis for V.
  (d) A real or complex matrix $A$ is normal if and only if $L_A$ is normal.
  (e) The eigenvalues of a self-adjoint operator must all be real.
  (f) The identity and zero operators are self-adjoint.
  (g) Every normal operator is diagonalizable.
  (h) Every self-adjoint operator is diagonalizable.
2. For each linear operator T on an inner product space V, determine whether T is normal, self-adjoint, or neither. If possible, produce an orthonormal basis of eigenvectors of T for V and list the corresponding eigenvalues.
  (a) $V = R^2$ and T is defined by $T(a, b) = (2a - 2b, -2a + 5b)$.
  (b) $V = R^3$ and T is defined by $T(a, b, c) = (-a + b, 5b, 4a - 2b + 5c)$.
  (c) $V = C^2$ and T is defined by $T(a, b) = (2a + ib, a + 2b)$.
  (d) $V = P_2(R)$ and T is defined by $T(f) = f'$, where

  $\langle f, g \rangle = \int_0^1 f(t) g(t)\,dt$.
  (e) $V = M_{2 \times 2}(R)$ and T is defined by $T(A) = A^t$.
  (f) $V = M_{2 \times 2}(R)$ and T is defined by $T\begin{pmatrix} a & b \\ c & d \end{pmatrix} = \begin{pmatrix} c & d \\ a & b \end{pmatrix}$.
3. Give an example of a linear operator T on $R^2$ and an ordered basis for $R^2$ that provides a counterexample to the statement in Exercise 1(c).
4. Let T and U be self-adjoint operators on an inner product space V. Prove that TU is self-adjoint if and only if $TU = UT$.
5. Prove (b) of Theorem 6.15.
6. Let V be a complex inner product space, and let T be a linear operator on V. Define
  $T_1 = \frac{1}{2}(T + T^*)$ and $T_2 = \frac{1}{2i}(T - T^*)$.
  (a) Prove that $T_1$ and $T_2$ are self-adjoint and that $T = T_1 + iT_2$.
  (b) Suppose also that $T = U_1 + iU_2$, where $U_1$ and $U_2$ are self-adjoint. Prove that $U_1 = T_1$ and $U_2 = T_2$.
  (c) Prove that T is normal if and only if $T_1T_2 = T_2T_1$.
7. Let T be a linear operator on an inner product space V, and let W be a T-invariant subspace of V. Prove the following results.
  (a) If T is self-adjoint, then $T_W$ is self-adjoint.
  (b) $W^\perp$ is $T^*$-invariant.
  (c) If W is both T- and $T^*$-invariant, then $(T_W)^* = (T^*)_W$.
  (d) If W is both T- and $T^*$-invariant and T is normal, then $T_W$ is normal.
8. Let T be a normal operator on a finite-dimensional complex inner product space V, and let W be a subspace of V. Prove that if W is T-invariant, then W is also $T^*$-invariant. Hint: Use Exercise 24 of Section 5.4.
9. Let T be a normal operator on a finite-dimensional inner product space V. Prove that $N(T) = N(T^*)$ and $R(T) = R(T^*)$. Hint: Use Theorem 6.15 and Exercise 12 of Section 6.3.
10. Let T be a self-adjoint operator on a finite-dimensional inner product space V. Prove that for all $x \in V$
  $\|T(x) \pm ix\|^2 = \|T(x)\|^2 + \|x\|^2$.
  Deduce that $T - iI$ is invertible and that $[(T - iI)^{-1}]^* = (T + iI)^{-1}$.
11. Assume that T is a linear operator on a complex (not necessarily finite-dimensional) inner product space V with an adjoint $T^*$. Prove the following results.
  (a) If T is self-adjoint, then $\langle T(x), x \rangle$ is real for all $x \in V$.
  (b) If T satisfies $\langle T(x), x \rangle = 0$ for all $x \in V$, then $T = T_0$. Hint: Replace $x$ by $x + y$ and then by $x + iy$, and expand the resulting inner products.
  (c) If $\langle T(x), x \rangle$ is real for all $x \in V$, then $T = T^*$.
12. Let T be a normal operator on a finite-dimensional real inner product space V whose characteristic polynomial splits. Prove that V has an orthonormal basis of eigenvectors of T. Hence prove that T is self-adjoint.
13. An $n \times n$ real matrix $A$ is said to be a Gramian matrix if there exists a real (square) matrix $B$ such that $A = B^tB$. Prove that $A$ is a Gramian matrix if and only if $A$ is symmetric and all of its eigenvalues are nonnegative. Hint: Apply Theorem 6.17 to $T = L_A$ to obtain an orthonormal basis $\{v_1, v_2, \ldots, v_n\}$ of eigenvectors with the associated eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_n$. Define the linear operator U by $U(v_i) = \sqrt{\lambda_i}\, v_i$.
14. Simultaneous Diagonalization. Let V be a finite-dimensional real inner product space, and let U and T be self-adjoint linear operators on V such that $UT = TU$. Prove that there exists an orthonormal basis for V consisting of vectors that are eigenvectors of both U and T. (The complex version of this result appears as Exercise 10 of Section 6.6.) Hint: For any eigenspace $W = E_\lambda$ of T, we have that W is both T- and U-invariant. By Exercise 7, we have that $W^\perp$ is both T- and U-invariant. Apply Theorem 6.17 and Theorem 6.6 (p. 350).
15. Let $A$ and $B$ be symmetric $n \times n$ matrices such that $AB = BA$. Use Exercise 14 to prove that there exists an orthogonal matrix $P$ such that $P^tAP$ and $P^tBP$ are both diagonal matrices.
16. Prove the Cayley–Hamilton theorem for a complex $n \times n$ matrix $A$. That is, if $f(t)$ is the characteristic polynomial of $A$, prove that $f(A) = O$. Hint: Use Schur's theorem to show that $A$ may be assumed to be upper triangular, in which case
  $f(t) = \prod_{i=1}^{n} (A_{ii} - t)$.
  Now if $T = L_A$, we have $(A_{jj}I - T)(e_j) \in \mathrm{span}(\{e_1, e_2, \ldots, e_{j-1}\})$ for $j \ge 2$, where $\{e_1, e_2, \ldots, e_n\}$ is the standard ordered basis for $C^n$. (The general case is proved in Section 5.4.)

The following definitions are used in Exercises 17 through 23.

Definitions. A linear operator T on a finite-dimensional inner product space is called positive definite [positive semidefinite] if T is self-adjoint and $\langle T(x), x \rangle > 0$ [$\langle T(x), x \rangle \ge 0$] for all $x \neq 0$. An $n \times n$ matrix $A$ with entries from $R$ or $C$ is called positive definite [positive semidefinite] if $L_A$ is positive definite [positive semidefinite].

17. Let T and U be self-adjoint linear operators on an $n$-dimensional inner product space V, and let $A = [T]_\beta$, where $\beta$ is an orthonormal basis for V. Prove the following results.
  (a) T is positive definite [semidefinite] if and only if all of its eigenvalues are positive [nonnegative].
  (b) T is positive definite if and only if
  $\sum_{i,j} A_{ij} a_j \overline{a_i} > 0$ for all nonzero $n$-tuples $(a_1, a_2, \ldots, a_n)$.
  (c) T is positive semidefinite if and only if $A = B^*B$ for some square matrix $B$.
  (d) If T and U are positive semidefinite operators such that $T^2 = U^2$, then $T = U$.
  (e) If T and U are positive definite operators such that $TU = UT$, then TU is positive definite.
  (f) T is positive definite [semidefinite] if and only if $A$ is positive definite [semidefinite].
  Because of (f), results analogous to items (a) through (d) hold for matrices as well as operators.
18. Let $T: V \to W$ be a linear transformation, where V and W are finite-dimensional inner product spaces. Prove the following results.
  (a) $T^*T$ and $TT^*$ are positive semidefinite. (See Exercise 15 of Section 6.3.)
  (b) $\mathrm{rank}(T^*T) = \mathrm{rank}(TT^*) = \mathrm{rank}(T)$.
19. Let T and U be positive definite operators on an inner product space V. Prove the following results.
  (a) $T + U$ is positive definite.
  (b) If $c > 0$, then $cT$ is positive definite.
  (c) $T^{-1}$ is positive definite.
20. Let V be an inner product space with inner product $\langle \cdot, \cdot \rangle$, and let T be a positive definite linear operator on V.
  Prove that $\langle x, y \rangle' = \langle T(x), y \rangle$ defines another inner product on V.
21. Let V be a finite-dimensional inner product space, and let T and U be self-adjoint operators on V such that T is positive definite. Prove that both TU and UT are diagonalizable linear operators that have only real eigenvalues. Hint: Show that UT is self-adjoint with respect to the inner product $\langle x, y \rangle' = \langle T(x), y \rangle$. To show that TU is self-adjoint, repeat the argument with $T^{-1}$ in place of T.
22. This exercise provides a converse to Exercise 20. Let V be a finite-dimensional inner product space with inner product $\langle \cdot, \cdot \rangle$, and let $\langle \cdot, \cdot \rangle'$ be any other inner product on V.
  (a) Prove that there exists a unique linear operator T on V such that $\langle x, y \rangle' = \langle T(x), y \rangle$ for all $x$ and $y$ in V. Hint: Let $\beta = \{v_1, v_2, \ldots, v_n\}$ be an orthonormal basis for V with respect to $\langle \cdot, \cdot \rangle$, and define a matrix $A$ by $A_{ij} = \langle v_j, v_i \rangle'$ for all $i$ and $j$. Let T be the unique linear operator on V such that $[T]_\beta = A$.
  (b) Prove that the operator T of (a) is positive definite with respect to both inner products.
23. Let U be a diagonalizable linear operator on a finite-dimensional inner product space V such that all of the eigenvalues of U are real. Prove that there exist positive definite linear operators $T_1$ and $T_1'$ and self-adjoint linear operators $T_2$ and $T_2'$ such that $U = T_2T_1 = T_1'T_2'$. Hint: Let $\langle \cdot, \cdot \rangle$ be the inner product associated with V, $\beta$ a basis of eigenvectors for U, $\langle \cdot, \cdot \rangle'$ the inner product on V with respect to which $\beta$ is orthonormal (see Exercise 22(a) of Section 6.1), and $T_1$ the positive definite operator according to Exercise 22. Show that U is self-adjoint with respect to $\langle \cdot, \cdot \rangle'$ and $U = T_1^{-1}U^*T_1$ (the adjoint is with respect to $\langle \cdot, \cdot \rangle$). Let $T_2 = T_1^{-1}U^*$.
24. This argument gives another proof of Schur's theorem. Let T be a linear operator on a finite-dimensional inner product space V.
(a) Suppose that β is an ordered basis for V such that [T]β is an upper triangular matrix. Let γ be the orthonormal basis for V obtained by applying the Gram–Schmidt orthogonalization process to β and then normalizing the resulting vectors. Prove that [T]γ is an upper triangular matrix.
(b) Use Exercise 32 of Section 5.4 and (a) to obtain an alternate proof of Schur’s theorem.

Sec. 6.5 Unitary and Orthogonal Operators and Their Matrices

1. Label the following statements as true or false. Assume that the underlying inner product spaces are finite-dimensional.
(a) Every unitary operator is normal.
(b) Every orthogonal operator is diagonalizable.
(c) A matrix is unitary if and only if it is invertible.
(d) If two matrices are unitarily equivalent, then they are also similar.
(e) The sum of unitary matrices is unitary.
(f) The adjoint of a unitary operator is unitary.
(g) If T is an orthogonal operator on V, then [T]β is an orthogonal matrix for any ordered basis β for V.
(h) If all the eigenvalues of a linear operator are 1, then the operator must be unitary or orthogonal.
(i) A linear operator may preserve the norm, but not the inner product.

2. For each of the following matrices A, find an orthogonal or unitary matrix P and a diagonal matrix D such that P∗AP = D.
(a) $\begin{pmatrix} 1 & 2 \\ 2 & 1 \end{pmatrix}$
(b) $\begin{pmatrix} 0 & -1 \\ 1 & 0 \end{pmatrix}$
(c) $\begin{pmatrix} 2 & 3 - 3i \\ 3 + 3i & 5 \end{pmatrix}$
(d) $\begin{pmatrix} 0 & 2 & 2 \\ 2 & 0 & 2 \\ 2 & 2 & 0 \end{pmatrix}$
(e) $\begin{pmatrix} 2 & 1 & 1 \\ 1 & 2 & 1 \\ 1 & 1 & 2 \end{pmatrix}$

3. Prove that the composite of unitary [orthogonal] operators is unitary [orthogonal].

4. For z ∈ C, define Tz: C → C by Tz(u) = zu. Characterize those z for which Tz is normal, self-adjoint, or unitary.

5. Which of the following pairs of matrices are unitarily equivalent?
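(a) $\begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$ and $\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$
(b) $\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$ and $\begin{pmatrix} 0 & \tfrac{1}{2} \\ \tfrac{1}{2} & 0 \end{pmatrix}$
(c) $\begin{pmatrix} 0 & 1 & 0 \\ -1 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix}$ and $\begin{pmatrix} 2 & 0 & 0 \\ 0 & -1 & 0 \\ 0 & 0 & 0 \end{pmatrix}$
(d) $\begin{pmatrix} 0 & 1 & 0 \\ -1 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix}$ and $\begin{pmatrix} 1 & 0 & 0 \\ 0 & i & 0 \\ 0 & 0 & -i \end{pmatrix}$
(e) $\begin{pmatrix} 1 & 1 & 0 \\ 0 & 2 & 2 \\ 0 & 0 & 3 \end{pmatrix}$ and $\begin{pmatrix} 1 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 3 \end{pmatrix}$

6. Let V be the inner product space of complex-valued continuous functions on [0, 1] with the inner product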

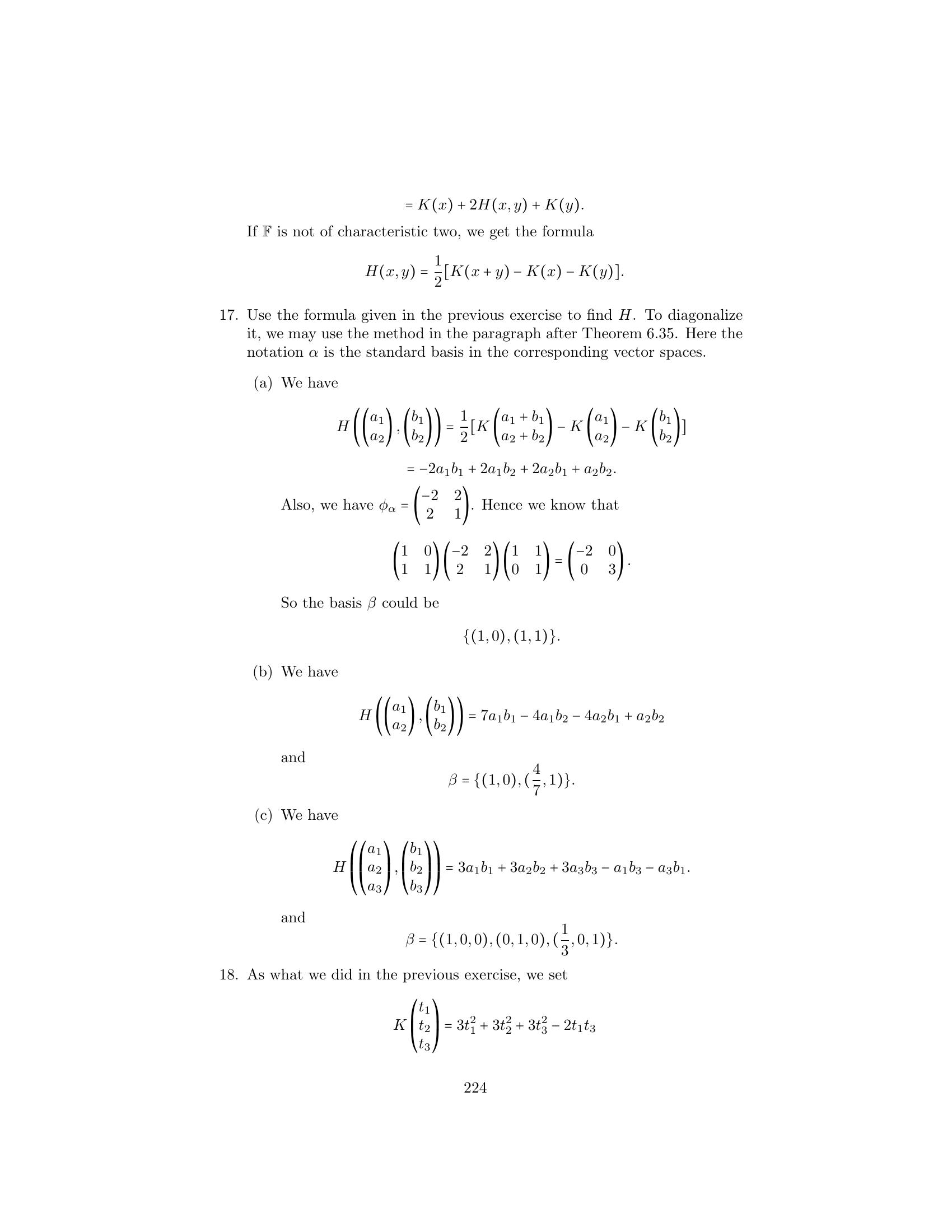

$\langle f, g \rangle = \int_0^1 f(t)\,\overline{g(t)}\,dt.$ Let h ∈ V, and define T: V → V by T(f) = hf. Prove that T is a unitary operator if and only if |h(t)| = 1 for 0 ≤ t ≤ 1.

7. Prove that if T is a unitary operator on a finite-dimensional inner product space V, then T has a unitary square root; that is, there exists a unitary operator U such that T = U².

8. Let T be a self-adjoint linear operator on a finite-dimensional inner product space. Prove that (T + iI)(T − iI)⁻¹ is unitary using Exercise 10 of Section 6.4.

9. Let U be a linear operator on a finite-dimensional inner product space V. If ‖U(x)‖ = ‖x‖ for all x in some orthonormal basis for V, must U be unitary? Justify your answer with a proof or a counterexample.

10. Let A be an n × n real symmetric or complex normal matrix. Prove that
$$\operatorname{tr}(A) = \sum_{i=1}^{n} \lambda_i \quad\text{and}\quad \operatorname{tr}(A^*A) = \sum_{i=1}^{n} |\lambda_i|^2,$$
where the λi’s are the (not necessarily distinct) eigenvalues of A.

11. Find an orthogonal matrix whose first row is (1/3, 2/3, 2/3).

12. Let A be an n × n real symmetric or complex normal matrix. Prove that
$$\det(A) = \prod_{i=1}^{n} \lambda_i,$$
where the λi’s are the (not necessarily distinct) eigenvalues of A.

13. Suppose that A and B are diagonalizable matrices. Prove or disprove that A is similar to B if and only if A and B are unitarily equivalent.

14. Prove that if A and B are unitarily equivalent matrices, then A is positive definite [semidefinite] if and only if B is positive definite [semidefinite]. (See the definitions in the exercises in Section 6.4.)

15. Let U be a unitary operator on an inner product space V, and let W be a finite-dimensional U-invariant subspace of V. Prove that
(a) U(W) = W;
(b) W⊥ is U-invariant.
Contrast (b) with Exercise 16.

16. Find an example of a unitary operator U on an inner product space and a U-invariant subspace W such that W⊥ is not U-invariant.

17. Prove that a matrix that is both unitary and upper triangular must be a diagonal matrix.

18.
Show that “is unitarily equivalent to” is an equivalence relation on Mn×n(C).

19. Let W be a finite-dimensional subspace of an inner product space V. By Theorem 6.7 (p. 352) and the exercises of Section 1.3, V = W ⊕ W⊥. Define U: V → V by U(v1 + v2) = v1 − v2, where v1 ∈ W and v2 ∈ W⊥. Prove that U is a self-adjoint unitary operator.

20. Let V be a finite-dimensional inner product space. A linear operator U on V is called a partial isometry if there exists a subspace W of V such that ‖U(x)‖ = ‖x‖ for all x ∈ W and U(x) = 0 for all x ∈ W⊥. Observe that W need not be U-invariant. Suppose that U is such an operator and {v1, v2, . . . , vk} is an orthonormal basis for W. Prove the following results.
(a) ⟨U(x), U(y)⟩ = ⟨x, y⟩ for all x, y ∈ W. Hint: Use Exercise 20 of Section 6.1.
(b) {U(v1), U(v2), . . . , U(vk)} is an orthonormal basis for R(U).
(c) There exists an orthonormal basis γ for V such that the first k columns of [U]γ form an orthonormal set and the remaining columns are zero.
(d) Let {w1, w2, . . . , wj} be an orthonormal basis for R(U)⊥ and β = {U(v1), U(v2), . . . , U(vk), w1, . . . , wj}. Then β is an orthonormal basis for V.
(e) Let T be the linear operator on V that satisfies T(U(vi)) = vi (1 ≤ i ≤ k) and T(wi) = 0 (1 ≤ i ≤ j). Then T is well defined, and T = U∗. Hint: Show that ⟨U(x), y⟩ = ⟨x, T(y)⟩ for all x, y ∈ β. There are four cases.
(f) U∗ is a partial isometry.
This exercise is continued in Exercise 9 of Section 6.6.

21. Let A and B be n × n matrices that are unitarily equivalent.
(a) Prove that tr(A∗A) = tr(B∗B).
(b) Use (a) to prove that
$$\sum_{i,j=1}^{n} |A_{ij}|^2 = \sum_{i,j=1}^{n} |B_{ij}|^2.$$
(c) Use (b) to show that the matrices $\begin{pmatrix} 1 & 2 \\ 2 & i \end{pmatrix}$ and $\begin{pmatrix} i & 4 \\ 1 & 1 \end{pmatrix}$ are not unitarily equivalent.

22. Let V be a real inner product space.
(a) Prove that any translation on V is a rigid motion.
(b) Prove that the composite of any two rigid motions on V is a rigid motion on V.
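The invariant in Exercise 21(b) is easy to check numerically. The following is a minimal sketch in plain Python, not part of the textbook: the helper names (`frobenius_sq`, `conjugate_by`) and the sample unitary matrix `P` are assumptions chosen for illustration. It computes the sum of |entry|² for a matrix and confirms that the value survives conjugation by one particular unitary matrix.

```python
def frobenius_sq(M):
    """Sum of |entry|^2 over all entries of M, which equals tr(M* M)."""
    return sum(abs(entry) ** 2 for row in M for entry in row)


def conjugate_transpose(M):
    """Return M*, the conjugate transpose of M."""
    rows, cols = len(M), len(M[0])
    return [[complex(M[i][j]).conjugate() for i in range(rows)] for j in range(cols)]


def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def conjugate_by(P, M):
    """Return P* M P (unitarily equivalent to M when P is unitary)."""
    return matmul(matmul(conjugate_transpose(P), M), P)


# The two matrices from Exercise 21(c).
A = [[1, 2], [2, 1j]]
B = [[1j, 4], [1, 1]]

# Illustrative unitary matrix (its columns (0, 1) and (i, 0) are orthonormal).
P = [[0, 1j], [1, 0]]

print(frobenius_sq(A))                   # 10.0
print(frobenius_sq(B))                   # 19.0
print(frobenius_sq(conjugate_by(P, A)))  # 10.0, unchanged by the conjugation
```

Since the two sums differ (10 versus 19), part (b) rules out unitary equivalence. The code only checks the numbers; the proof of invariance is still the content of parts (a) and (b).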
23. Prove the following variation of Theorem 6.22: If f: V → V is a rigid motion on a finite-dimensional real inner product space V, then there exists a unique orthogonal operator T on V and a unique translation g on V such that f = T ∘ g.

24. Let T and U be orthogonal operators on R². Use Theorem 6.23 to prove the following results.
(a) If T and U are both reflections about lines through the origin, then UT is a rotation.
(b) If T is a rotation and U is a reflection about a line through the origin, then both UT and TU are reflections about lines through the origin.

25. Suppose that T and U are reflections of R² about the respective lines L and L′ through the origin and that φ and ψ are the angles from the positive x-axis to L and L′, respectively. By Exercise 24, UT is a rotation. Find its angle of rotation.

26. Suppose that T and U are orthogonal operators on R² such that T is the rotation by the angle φ and U is the reflection about the line L through the origin. Let ψ be the angle from the positive x-axis to L. By Exercise 24, both UT and TU are reflections about lines L1 and L2, respectively, through the origin.
(a) Find the angle θ from the positive x-axis to L1.
(b) Find the angle θ from the positive x-axis to L2.

27. Find new coordinates x′, y′ so that the following quadratic forms can be written as λ1(x′)² + λ2(y′)².
(a) x² + 4xy + y²
(b) 2x² + 2xy + 2y²
(c) x² − 12xy − 4y²
(d) 3x² + 2xy + 3y²
(e) x² − 2xy + y²

28. Consider the expression XᵗAX, where Xᵗ = (x, y, z) and A is as defined in Exercise 2(e). Find a change of coordinates x′, y′, z′ so that the preceding expression is of the form λ1(x′)² + λ2(y′)² + λ3(z′)².

29. QR-Factorization. Let w1, w2, . . . , wn be linearly independent vectors in Fⁿ, and let v1, v2, . . . , vn be the orthogonal vectors obtained from w1, w2, . . . , wn by the Gram–Schmidt process. Let u1, u2, . . . , un be the orthonormal basis obtained by normalizing the vi’s.
(a) Solving (1) in Section 6.2 for wk in terms of uk, show that
$$w_k = \|v_k\|\,u_k + \sum_{j=1}^{k-1} \langle w_k, u_j \rangle\, u_j \qquad (1 \le k \le n).$$
(b) Let A and Q denote the n × n matrices in which the kth columns are wk and uk, respectively. Define R ∈ Mn×n(F) by
$$R_{jk} = \begin{cases} \|v_j\| & \text{if } j = k \\ \langle w_k, u_j \rangle & \text{if } j < k \\ 0 & \text{if } j > k. \end{cases}$$
Prove A = QR.
(c) Compute Q and R as in (b) for the 3 × 3 matrix whose columns are the vectors w1, w2, w3, respectively, in Example 4 of Section 6.2.
(d) Since Q is unitary [orthogonal] and R is upper triangular in (b), we have shown that every invertible matrix is the product of a unitary [orthogonal] matrix and an upper triangular matrix. Suppose that A ∈ Mn×n(F) is invertible and A = Q1R1 = Q2R2, where Q1, Q2 ∈ Mn×n(F) are unitary and R1, R2 ∈ Mn×n(F) are upper triangular. Prove that D = R2R1⁻¹ is a unitary diagonal matrix. Hint: Use Exercise 17.
(e) The QR factorization described in (b) provides an orthogonalization method for solving a linear system Ax = b when A is invertible. Decompose A to QR, by the Gram–Schmidt process or other means, where Q is unitary and R is upper triangular. Then QRx = b, and hence Rx = Q∗b. This last system can be easily solved since R is upper triangular.¹ Use the orthogonalization method and (c) to solve the system
x1 + 2x2 + 2x3 = 1
x1 + 2x3 = 11
x2 + x3 = −1.

30. Suppose that β and γ are ordered bases for an n-dimensional real [complex] inner product space V. Prove that if Q is an orthogonal [unitary] n × n matrix that changes γ-coordinates into β-coordinates, then β is orthonormal if and only if γ is orthonormal.

The following definition is used in Exercises 31 and 32.

Definition. Let V be a finite-dimensional complex [real] inner product space, and let u be a unit vector in V. Define the Householder operator Hu: V → V by Hu(x) = x − 2⟨x, u⟩u for all x ∈ V.

31. Let Hu be a Householder operator on a finite-dimensional inner product space V.
Prove the following results.
(a) Hu is linear.
(b) Hu(x) = x if and only if x is orthogonal to u.
(c) Hu(u) = −u.
(d) Hu∗ = Hu and Hu² = I, and hence Hu is a unitary [orthogonal] operator on V.
(Note: If V is a real inner product space, then in the language of Section 6.11, Hu is a reflection.)

¹ At one time, because of its great stability, this method for solving large systems of linear equations with a computer was being advocated as a better method than Gaussian elimination even though it requires about three times as much work. (Later, however, J. H. Wilkinson showed that if Gaussian elimination is done “properly,” then it is nearly as stable as the orthogonalization method.)

32. Let V be a finite-dimensional inner product space over F. Let x and y be linearly independent vectors in V such that ‖x‖ = ‖y‖.
(a) If F = C, prove that there exists a unit vector u in V and a complex number θ with |θ| = 1 such that Hu(x) = θy. Hint: Choose θ so that ⟨x, θy⟩ is real, and set $u = \dfrac{1}{\|x - \theta y\|}(x - \theta y)$.
(b) If F = R, prove that there exists a unit vector u in V such that Hu(x) = y.

1. Label the following statements as true or false. Assume that the underlying inner product spaces are finite-dimensional.
(a) All projections are self-adjoint.
(b) An orthogonal projection is uniquely determined by its range.
(c) Every self-adjoint operator is a linear combination of orthogonal projections.
(d) If T is a projection on W, then T(x) is the vector in W that is closest to x.
(e) Every orthogonal projection is a unitary operator.

2. Let V = R², W = span({(1, 2)}), and β be the standard ordered basis for V. Compute [T]β, where T is the orthogonal projection of V on W. Do the same for V = R³ and W = span({(1, 0, 1)}).

3. For each of the matrices A in Exercise 2 of Section 6.5:
(1) Verify that L_A possesses a spectral decomposition.
(2) For each eigenvalue of L_A, explicitly define the orthogonal projection on the corresponding eigenspace.
(3) Verify your results using the spectral theorem.

4. Let W be a finite-dimensional subspace of an inner product space V. Show that if T is the orthogonal projection of V on W, then I − T is the orthogonal projection of V on W⊥.

5. Let T be a linear operator on a finite-dimensional inner product space V.
(a) If T is an orthogonal projection, prove that ‖T(x)‖ ≤ ‖x‖ for all x ∈ V. Give an example of a projection for which this inequality does not hold. What can be concluded about a projection for which the inequality is actually an equality for all x ∈ V?
(b) Suppose that T is a projection such that ‖T(x)‖ ≤ ‖x‖ for x ∈ V. Prove that T is an orthogonal projection.

6. Let T be a normal operator on a finite-dimensional inner product space. Prove that if T is a projection, then T is also an orthogonal projection.

7. Let T be a normal operator on a finite-dimensional complex inner product space V. Use the spectral decomposition λ1T1 + λ2T2 + · · · + λkTk of T to prove the following results.
(a) If g is a polynomial, then $g(T) = \sum_{i=1}^{k} g(\lambda_i)\,T_i$.
(b) If Tⁿ = T₀ for some n, then T = T₀.
(c) Let U be a linear operator on V. Then U commutes with T if and only if U commutes with each Ti.
(d) There exists a normal operator U on V such that U² = T.
(e) T is invertible if and only if λi ≠ 0 for 1 ≤ i ≤ k.
(f) T is a projection if and only if every eigenvalue of T is 1 or 0.
(g) T = −T∗ if and only if every λi is an imaginary number.

Sec. 6.7 The Singular Value Decomposition and the Pseudoinverse

8. Use Corollary 1 of the spectral theorem to show that if T is a normal operator on a complex finite-dimensional inner product space and U is a linear operator that commutes with T, then U commutes with T∗.

9. Referring to Exercise 20 of Section 6.5, prove the following facts about a partial isometry U.
(a) U∗U is an orthogonal projection on W.
(b) UU∗U = U.
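For Exercise 2 of this section, the matrix of the orthogonal projection on a line span({w}) in Rⁿ can be written as w wᵗ / (wᵗ w). The sketch below is an illustration under that assumption (real vectors, standard inner product); the function names are hypothetical. It uses exact rational arithmetic and checks the two defining properties of an orthogonal projection matrix, P² = P and Pᵗ = P.

```python
from fractions import Fraction


def projection_matrix(w):
    """Matrix (in the standard basis) of the orthogonal projection of R^n
    on span({w}), computed as w w^t / (w^t w) for a nonzero real vector w."""
    norm_sq = sum(c * c for c in w)
    return [[Fraction(a * b, norm_sq) for b in w] for a in w]


def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(M):
    """Return the transpose of M."""
    return [list(col) for col in zip(*M)]


P2 = projection_matrix([1, 2])     # W = span({(1, 2)}) in R^2
P3 = projection_matrix([1, 0, 1])  # W = span({(1, 0, 1)}) in R^3

for P in (P2, P3):
    assert matmul(P, P) == P   # idempotent: P is a projection
    assert transpose(P) == P   # symmetric: the projection is orthogonal

print(P2)  # entries 1/5, 2/5, 2/5, 4/5
print(P3)  # entries 1/2 and 0
```

The same helper also illustrates Exercise 4: subtracting `P2` from the identity gives the projection on W⊥, which can be checked with the same two assertions.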
10. Simultaneous diagonalization. Let U and T be normal operators on a finite-dimensional complex inner product space V such that TU = UT. Prove that there exists an orthonormal basis for V consisting of vectors that are eigenvectors of both T and U. Hint: Use the hint of Exercise 14 of Section 6.4 along with Exercise 8.

11. Prove (c) of the spectral theorem.

1. Label the following statements as true or false.
(a) The singular values of any linear operator on a finite-dimensional vector space are also eigenvalues of the operator.
(b) The singular values of any matrix A are the eigenvalues of A∗A.
(c) For any matrix A and any scalar c, if σ is a singular value of A, then |c|σ is a singular value of cA.
(d) The singular values of any linear operator are nonnegative.
(e) If λ is an eigenvalue of a self-adjoint matrix A, then λ is a singular value of A.
(f) For any m × n matrix A and any b ∈ Fⁿ, the vector A†b is a solution to Ax = b.
(g) The pseudoinverse of any linear operator exists even if the operator is not invertible.

2. Let T: V → W be a linear transformation of rank r, where V and W are finite-dimensional inner product spaces. In each of the following, find orthonormal bases {v1, v2, . . . , vn} for V and {u1, u2, . . . , um} for W, and the nonzero singular values σ1 ≥ σ2 ≥ · · · ≥ σr of T such that T(vi) = σiui for 1 ≤ i ≤ r.
(a) T: R² → R³ defined by T(x1, x2) = (x1, x1 + x2, x1 − x2)
(b) T: P2(R) → P1(R), where T(f(x)) = f″(x), and the inner products are defined as in Example 1
(c) Let V = W = span({1, sin x, cos x}) with the inner product defined by $\langle f, g \rangle = \int_0^{2\pi} f(t)g(t)\,dt$, and T is defined by T(f) = f′ + 2f
(d) T: C² → C² defined by T(z1, z2) = ((1 − i)z2, (1 + i)z1 + z2)

3. Find a singular value decomposition for each of the following matrices.
(a) $\begin{pmatrix} 1 & 1 \\ 1 & 1 \\ -1 & -1 \end{pmatrix}$
(b) $\begin{pmatrix} 1 & 0 & 1 \\ 1 & 0 & -1 \end{pmatrix}$
(c) $\begin{pmatrix} 1 & 1 \\ 0 & 1 \\ 1 & 0 \\ 1 & 1 \end{pmatrix}$
(d) $\begin{pmatrix} 1 & 1 & 1 \\ 1 & -1 & 0 \\ 1 & 0 & -1 \end{pmatrix}$
(e) $\begin{pmatrix} 1 + i & 1 \\ 1 - i & -i \end{pmatrix}$
(f) $\begin{pmatrix} 1 & 1 & 1 & 1 \\ 1 & 0 & -2 & 1 \\ 1 & -1 & 1 & 1 \end{pmatrix}$

4.
Find a polar decomposition for each of the following matrices.
(a) $\begin{pmatrix} 1 & 1 \\ 2 & -2 \end{pmatrix}$
(b) $\begin{pmatrix} 20 & 4 & 0 \\ 0 & 0 & 1 \\ 4 & 20 & 0 \end{pmatrix}$

5. Find an explicit formula for each of the following expressions.
(a) T†(x1, x2, x3), where T is the linear transformation of Exercise 2(a)
(b) T†(a + bx + cx²), where T is the linear transformation of Exercise 2(b)
(c) T†(a + b sin x + c cos x), where T is the linear transformation of Exercise 2(c)
(d) T†(z1, z2), where T is the linear transformation of Exercise 2(d)

6. Use the results of Exercise 3 to find the pseudoinverse of each of the following matrices.
(a) $\begin{pmatrix} 1 & 1 \\ 1 & 1 \\ -1 & -1 \end{pmatrix}$
(b) $\begin{pmatrix} 1 & 0 & 1 \\ 1 & 0 & -1 \end{pmatrix}$
(c) $\begin{pmatrix} 1 & 1 \\ 0 & 1 \\ 1 & 0 \\ 1 & 1 \end{pmatrix}$
(d) $\begin{pmatrix} 1 & 1 & 1 \\ 1 & -1 & 0 \\ 1 & 0 & -1 \end{pmatrix}$
(e) $\begin{pmatrix} 1 + i & 1 \\ 1 - i & -i \end{pmatrix}$
(f) $\begin{pmatrix} 1 & 1 & 1 & 1 \\ 1 & 0 & -2 & 1 \\ 1 & -1 & 1 & 1 \end{pmatrix}$

7. For each of the given linear transformations T: V → W,
(i) Describe the subspace Z1 of V such that T†T is the orthogonal projection of V on Z1.
(ii) Describe the subspace Z2 of W such that TT† is the orthogonal projection of W on Z2.
(a) T is the linear transformation of Exercise 2(a)
(b) T is the linear transformation of Exercise 2(b)
(c) T is the linear transformation of Exercise 2(c)
(d) T is the linear transformation of Exercise 2(d)

8. For each of the given systems of linear equations,
(i) If the system is consistent, find the unique solution having minimum norm.
(ii) If the system is inconsistent, find the “best approximation to a solution” having minimum norm, as described in Theorem 6.30(b). (Use your answers to parts (a) and (f) of Exercise 6.)
(a)
x1 + x2 = 1
x1 + x2 = 2
−x1 + −x2 = 0
(b)
x1 + x2 + x3 + x4 = 2
x1 − 2x3 + x4 = −1
x1 − x2 + x3 + x4 = 2

9. Let V and W be finite-dimensional inner product spaces over F, and suppose that {v1, v2, . . . , vn} and {u1, u2, . . . , um} are orthonormal bases for V and W, respectively.
Let T: V → W be a linear transformation of rank r, and suppose that σ1 ≥ σ2 ≥ · · · ≥ σr > 0 are such that
$$T(v_i) = \begin{cases} \sigma_i u_i & \text{if } 1 \le i \le r \\ 0 & \text{if } r < i. \end{cases}$$
(a) Prove that {u1, u2, . . . , um} is a set of eigenvectors of TT∗ with corresponding eigenvalues λ1, λ2, . . . , λm, where
$$\lambda_i = \begin{cases} \sigma_i^2 & \text{if } 1 \le i \le r \\ 0 & \text{if } r < i. \end{cases}$$
(b) Let A be an m × n matrix with real or complex entries. Prove that the nonzero singular values of A are the positive square roots of the nonzero eigenvalues of AA∗, including repetitions.
(c) Prove that TT∗ and T∗T have the same nonzero eigenvalues, including repetitions.
(d) State and prove a result for matrices analogous to (c).

10. Use Exercise 8 of Section 2.5 to obtain another proof of Theorem 6.27, the singular value decomposition theorem for matrices.

11. This exercise relates the singular values of a well-behaved linear operator or matrix to its eigenvalues.
(a) Let T be a normal linear operator on an n-dimensional inner product space with eigenvalues λ1, λ2, . . . , λn. Prove that the singular values of T are |λ1|, |λ2|, . . . , |λn|.
(b) State and prove a result for matrices analogous to (a).

12. Let A be a normal matrix with an orthonormal basis of eigenvectors β = {v1, v2, . . . , vn} and corresponding eigenvalues λ1, λ2, . . . , λn. Let V be the n × n matrix whose columns are the vectors in β. Prove that for each i there is a scalar θi of absolute value 1 such that if U is the n × n matrix with θivi as column i and Σ is the diagonal matrix such that Σii = |λi| for each i, then UΣV∗ is a singular value decomposition of A.

13. Prove that if A is a positive semidefinite matrix, then the singular values of A are the same as the eigenvalues of A.

14. Prove that if A is a positive definite matrix and A = UΣV∗ is a singular value decomposition of A, then U = V.

15. Let A be a square matrix with a polar decomposition A = WP.
(a) Prove that A is normal if and only if WP² = P²W.
(b) Use (a) to prove that A is normal if and only if WP = PW.

16. Let A be a square matrix. Prove an alternate form of the polar decomposition for A: There exists a unitary matrix W and a positive semidefinite matrix P such that A = PW.

17. Let T and U be linear operators on R² defined for all (x1, x2) ∈ R² by T(x1, x2) = (x1, 0) and U(x1, x2) = (x1 + x2, 0).
(a) Prove that (UT)† ≠ T†U†.
(b) Exhibit matrices A and B such that AB is defined, but (AB)† ≠ B†A†.

18. Let A be an m × n matrix. Prove the following results.
(a) For any m × m unitary matrix G, (GA)† = A†G∗.
(b) For any n × n unitary matrix H, (AH)† = H∗A†.

19. Let A be a matrix with real or complex entries. Prove the following results.
(a) The nonzero singular values of A are the same as the nonzero singular values of A∗, which are the same as the nonzero singular values of Aᵗ.
(b) (A†)∗ = (A∗)†.
(c) (A†)ᵗ = (Aᵗ)†.

20. Let A be a square matrix such that A² = O. Prove that (A†)² = O.

21. Let V and W be finite-dimensional inner product spaces, and let T: V → W be linear. Prove the following results.
(a) TT†T = T.
(b) T†TT† = T†.
(c) Both T†T and TT† are self-adjoint.
The preceding three statements are called the Penrose conditions, and they characterize the pseudoinverse of a linear transformation as shown in Exercise 22.

22. Let V and W be finite-dimensional inner product spaces. Let T: V → W and U: W → V be linear transformations such that TUT = T, UTU = U, and both UT and TU are self-adjoint. Prove that U = T†.

23. State and prove a result for matrices that is analogous to the result of Exercise 21.

24. State and prove a result for matrices that is analogous to the result of Exercise 22.

25. Let V and W be finite-dimensional inner product spaces, and let T: V → W be linear. Prove the following results.
(a) If T is one-to-one, then T∗T is invertible and T† = (T∗T)⁻¹T∗.
(b) If T is onto, then TT∗ is invertible and T† = T∗(TT∗)⁻¹.

26. Let V and W be finite-dimensional inner product spaces with orthonormal bases β and γ, respectively, and let T: V → W be linear. Prove that $([T]_\beta^\gamma)^\dagger = [T^\dagger]_\gamma^\beta$.

27. Let V and W be finite-dimensional inner product spaces, and let T: V → W be a linear transformation. Prove part (b) of the lemma to Theorem 6.30: TT† is the orthogonal projection of W on R(T).

1. Label the following statements as true or false.
(a) Every quadratic form is a bilinear form.
(b) If two matrices are congruent, they have the same eigenvalues.
(c) Symmetric bilinear forms have symmetric matrix representations.
(d) Any symmetric matrix is congruent to a diagonal matrix.
(e) The sum of two symmetric bilinear forms is a symmetric bilinear form.
(f) Two symmetric matrices with the same characteristic polynomial are matrix representations of the same bilinear form.
(g) There exists a bilinear form H such that H(x, y) ≠ 0 for all x and y.
(h) If V is a vector space of dimension n, then dim(B(V)) = 2n.
(i) Let H be a bilinear form on a finite-dimensional vector space V with dim(V) > 1. For any x ∈ V, there exists y ∈ V such that y ≠ 0, but H(x, y) = 0.
(j) If H is any bilinear form on a finite-dimensional real inner product space V, then there exists an ordered basis β for V such that ψβ(H) is a diagonal matrix.

2. Prove properties 1, 2, 3, and 4 on page 423.

3. (a) Prove that the sum of two bilinear forms is a bilinear form.
(b) Prove that the product of a scalar and a bilinear form is a bilinear form.
(c) Prove Theorem 6.31.

4. Determine which of the mappings that follow are bilinear forms. Justify your answers.
(a) Let V = C[0, 1] be the space of continuous real-valued functions on the closed interval [0, 1]. For f, g ∈ V, define

1 H(f, g) = f (t)g(t)dt. 0 (b) Let V be a vector space over F , and let J ∈ B(V) be nonzero. Define H : V × V → F by H(x, y) = [J(x, y)]2 for all x, y ∈ V. 448 Chap. 6 Inner Product Spaces (c)(d)(e)(f )Define H : R × R → R by H(t1 , t2 ) = t1 + 2t2 . Consider the vectors of R2 as column vectors, and let H : R2 → R be the function defined by H(x, y) = det(x, y), the determinant of the 2 × 2 matrix with columns x and y. Let V be a real inner product space, and let H : V × V → R be the function defined by H(x, y) = x, y for x, y ∈ V. Let V be a complex inner product space, and let H : V × V → C be the function defined by H(x, y) = x, y for x, y ∈ V. 5. Verify that each of the given mappings is a bilinear form. Then compute its matrix representation with respect to the given ordered basis β. (a) H : R3 × R3 → R, where ⎛⎛ ⎞ ⎛ ⎞⎞ a1 b1 H ⎝⎝a2 ⎠ , ⎝b2 ⎠⎠ = a1 b1 − 2a1 b2 + a2 b1 − a3 b3 a3 b3 and ⎧⎛ ⎞ ⎛ ⎞ ⎛ ⎞⎫ ⎨ 1 1 0 ⎬ β = ⎝0⎠ , ⎝ 0⎠ , ⎝1⎠ . ⎩ ⎭ 1 −1 0 (b) Let V = M2×2 (R) and 1 0 0 1 0 0 0 0 β = , , , . 0 0 0 0 1 0 0 1 Define H : V × V → R by H(A, B) = tr(A)· tr(B). (c) Let β = {cos t, sin t, cos 2t, sin 2t}. Then β is an ordered basis for V = span(β), a four-dimensional subspace of the space of all continuous functions on R. Let H : V × V → R be the function defined by H(f, g) = f (0) · g (0). 6. Let H : R2 → R be the function defined by a1 b1 a1 b 1 H , = a1 b2 + a2 b1 for , ∈ R2 . a2 b2 a2 b2 (a) Prove that H is a bilinear form. (b) Find the 2× 2 matrix A such that H(x, y) = xtAy for all x, y ∈ R2 . For a 2 × 2 matrix M with columns x and y, the bilinear form H(M ) = H(x, y) is called the permanent of M . 7.Let V and W be vector spaces over the same field, and let T : V → W be a linear transformation. For any H ∈ B(W), define T(H)

: V × V → F by T(H)(x,

y) = H(T(x), T(y)) for all x, y ∈ V. Prove the following results. Sec. 6.8 Bilinear and Quadratic Forms (a) If H ∈ B(W), then T(H)

∈ B(V). (b) T

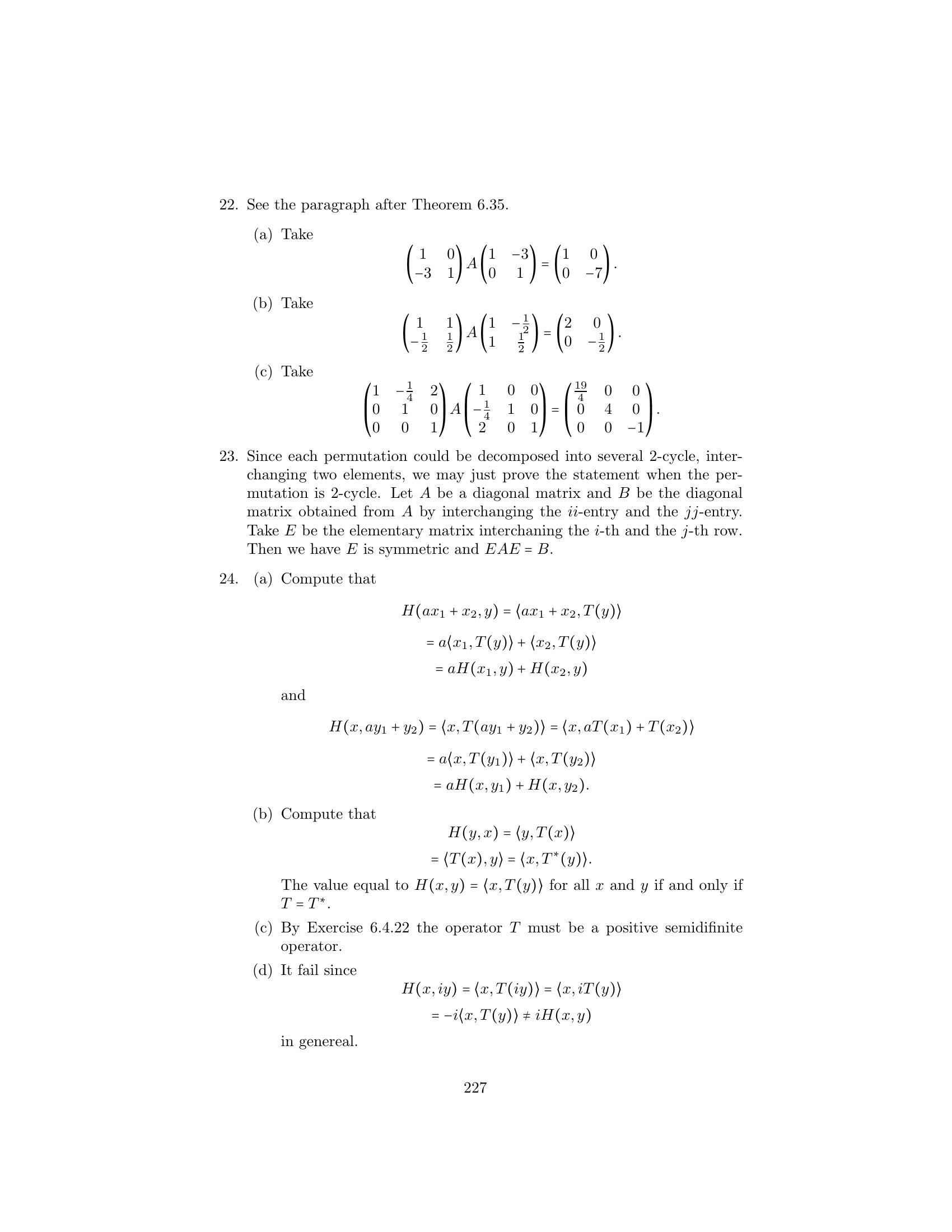

: B(W) → B(V) is a linear transformation. (c) If T is an isomorphism, then so is T.

8. Assume the notation of Theorem 6.32.
(a) Prove that for any ordered basis β, ψβ is linear.
(b) Let β be an ordered basis for an n-dimensional space V over F, and let φβ: V → Fⁿ be the standard representation of V with respect to β. For A ∈ Mn×n(F), define H: V × V → F by H(x, y) = [φβ(x)]ᵗA[φβ(y)]. Prove that H ∈ B(V). Can you establish this as a corollary to Exercise 7?
(c) Prove the converse of (b): Let H be a bilinear form on V. If A = ψβ(H), then H(x, y) = [φβ(x)]ᵗA[φβ(y)].

9. (a) Prove Corollary 1 to Theorem 6.32.
(b) For a finite-dimensional vector space V, describe a method for finding an ordered basis for B(V).

10. Prove Corollary 2 to Theorem 6.32.

11. Prove Corollary 3 to Theorem 6.32.

12. Prove that the relation of congruence is an equivalence relation.

13. The following outline provides an alternative proof to Theorem 6.33.
(a) Suppose that β and γ are ordered bases for a finite-dimensional vector space V, and let Q be the change of coordinate matrix changing γ-coordinates to β-coordinates. Prove that φβ = L_Q φγ, where φβ and φγ are the standard representations of V with respect to β and γ, respectively.
(b) Apply Corollary 2 to Theorem 6.32 to (a) to obtain an alternative proof of Theorem 6.33.

14. Let V be a finite-dimensional vector space and H ∈ B(V). Prove that, for any ordered bases β and γ of V, rank(ψβ(H)) = rank(ψγ(H)).

15. Prove the following results.
(a) Any square diagonal matrix is symmetric.
(b) Any matrix congruent to a diagonal matrix is symmetric.
(c) the corollary to Theorem 6.35

16. Let V be a vector space over a field F not of characteristic two, and let H be a symmetric bilinear form on V. Prove that if K(x) = H(x, x) is the quadratic form associated with H, then, for all x, y ∈ V,
$$H(x, y) = \tfrac{1}{2}\,[K(x + y) - K(x) - K(y)].$$

17. For each of the given quadratic forms K on a real inner product space V, find a symmetric bilinear form H such that K(x) = H(x, x) for all x ∈ V.
Then find an orthonormal basis β for V such that ψβ(H) is a diagonal matrix.
(a) K: R² → R defined by $K\begin{pmatrix} t_1 \\ t_2 \end{pmatrix} = -2t_1^2 + 4t_1t_2 + t_2^2$
(b) K: R² → R defined by $K\begin{pmatrix} t_1 \\ t_2 \end{pmatrix} = 7t_1^2 - 8t_1t_2 + t_2^2$
(c) K: R³ → R defined by $K\begin{pmatrix} t_1 \\ t_2 \\ t_3 \end{pmatrix} = 3t_1^2 + 3t_2^2 + 3t_3^2 - 2t_1t_3$

18. Let S be the set of all (t1, t2, t3) ∈ R³ for which
$$3t_1^2 + 3t_2^2 + 3t_3^2 - 2t_1t_3 + 2\sqrt{2}\,(t_1 + t_3) + 1 = 0.$$
Find an orthonormal basis β for R³ for which the equation relating the coordinates of points of S relative to β is simpler. Describe S geometrically.

19. Prove the following refinement of Theorem 6.37(d).
(a) If 0 < rank(A) < n and A has no negative eigenvalues, then f has no local maximum at p.
(b) If 0 < rank(A) < n and A has no positive eigenvalues, then f has no local minimum at p.

20. Prove the following variation of the second-derivative test for the case n = 2: Define
$$D = \left[\frac{\partial^2 f(p)}{\partial t_1^2}\right]\left[\frac{\partial^2 f(p)}{\partial t_2^2}\right] - \left[\frac{\partial^2 f(p)}{\partial t_1\,\partial t_2}\right]^2.$$
(a) If D > 0 and ∂²f(p)/∂t1² > 0, then f has a local minimum at p.
(b) If D > 0 and ∂²f(p)/∂t1² < 0, then f has a local maximum at p.
(c) If D < 0, then f has no local extremum at p.
(d) If D = 0, then the test is inconclusive.
Hint: Observe that, as in Theorem 6.37, D = det(A) = λ1λ2, where λ1 and λ2 are the eigenvalues of A.

21. Let A and E be in Mn×n(F), with E an elementary matrix. In Section 3.1, it was shown that AE can be obtained from A by means of an elementary column operation. Prove that EᵗA can be obtained by means of the same elementary operation performed on the rows rather than on the columns of A. Hint: Note that EᵗA = (AᵗE)ᵗ.

22. For each of the following matrices A with entries from R, find a diagonal matrix D and an invertible matrix Q such that QᵗAQ = D.
(a) $\begin{pmatrix} 1 & 3 \\ 3 & 2 \end{pmatrix}$
(b) $\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}$
(c) $\begin{pmatrix} 3 & 1 & 2 \\ 1 & 4 & 0 \\ 2 & 0 & -1 \end{pmatrix}$
Hint for (b): Use an elementary operation other than interchanging columns.
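The procedure behind Exercise 22 (pair each elementary column operation with the same operation on rows, as in Exercise 21) can be sketched in a few lines. This is an illustrative sketch, not the book's algorithm verbatim: the function name `congruence_diagonalize` is hypothetical, it only adds multiples of one column (row) to another, and it assumes every pivot it meets is nonzero. It therefore handles matrices such as 22(a) and 22(c) but deliberately stops on 22(b), where the hint asks for a different elementary operation.

```python
from fractions import Fraction


def transpose(M):
    """Return the transpose of M."""
    return [list(col) for col in zip(*M)]


def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def congruence_diagonalize(A):
    """Return (Q, D) with Q^t A Q = D diagonal for a symmetric matrix A.

    Assumption: no zero pivot is met while an off-diagonal entry in the
    same row is still nonzero; otherwise ValueError is raised.
    """
    n = len(A)
    D = [[Fraction(x) for x in row] for row in A]
    Q = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n):
        if D[k][k] == 0:
            if all(D[k][j] == 0 for j in range(k + 1, n)):
                continue  # row k is already cleared
            raise ValueError("zero pivot: another elementary operation is needed")
        for j in range(k + 1, n):
            factor = D[k][j] / D[k][k]
            # Column operation col_j <- col_j - factor * col_k, on D and on Q.
            for i in range(n):
                D[i][j] -= factor * D[i][k]
                Q[i][j] -= factor * Q[i][k]
            # Matching row operation row_j <- row_j - factor * row_k, on D only.
            for i in range(n):
                D[j][i] -= factor * D[k][i]
    return Q, D


A = [[1, 3], [3, 2]]  # the matrix of Exercise 22(a)
Q, D = congruence_diagonalize(A)
assert matmul(matmul(transpose(Q), A), Q) == D
print(Q)  # columns (1, 0) and (-3, 1)
print(D)  # diagonal entries 1 and -7
```

Because Q accumulates only the column operations, QᵗAQ = D holds at every step. A diagonalizing pair (Q, D) is not unique, so a hand computation may legitimately produce a different answer.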
23. Prove that if the diagonal entries of a diagonal matrix are permuted, then the resulting diagonal matrix is congruent to the original one.

24. Let T be a linear operator on a real inner product space V, and define H: V × V → R by H(x, y) = ⟨x, T(y)⟩ for all x, y ∈ V.
(a) Prove that H is a bilinear form.
(b) Prove that H is symmetric if and only if T is self-adjoint.
(c) What properties must T have for H to be an inner product on V?
(d) Explain why H may fail to be a bilinear form if V is a complex inner product space.

25. Prove the converse to Exercise 24(a): Let V be a finite-dimensional real inner product space, and let H be a bilinear form on V. Then there exists a unique linear operator T on V such that H(x, y) = ⟨x, T(y)⟩ for all x, y ∈ V. Hint: Choose an orthonormal basis β for V, let A = ψβ(H), and let T be the linear operator on V such that [T]β = A. Apply Exercise 8(c) of this section and Exercise 15 of Section 6.2 (p. 355).

26. Prove that the number of distinct equivalence classes of congruent n × n real symmetric matrices is
$$\frac{(n + 1)(n + 2)}{2}.$$

Sec. 6.9 Einstein’s Special Theory of Relativity

1. Prove (b), (c), and (d) of Theorem 6.39.

2. Complete the proof of Theorem 6.40 for the case t < 0.

3. For
$$w_1 = \begin{pmatrix} 1 \\ 0 \\ 0 \\ 1 \end{pmatrix} \quad\text{and}\quad w_2 = \begin{pmatrix} 1 \\ 0 \\ 0 \\ -1 \end{pmatrix},$$
show that
(a) {w1, w2} is an orthogonal basis for span({e1, e4});
(b) span({e1, e4}) is Tv∗ L_A Tv-invariant.

4. Prove the corollary to Theorem 6.41. Hints:
(a) Prove that
$$B_v^* A B_v = \begin{pmatrix} p & 0 & 0 & q \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ -q & 0 & 0 & -p \end{pmatrix}, \quad\text{where } p = \frac{a + b}{2} \text{ and } q = \frac{a - b}{2}.$$
(b) Show that q = 0 by using the fact that Bv∗ABv is self-adjoint.
(c) Apply Theorem 6.40 to
$$w = \begin{pmatrix} 0 \\ 1 \\ 0 \\ 1 \end{pmatrix}$$
to show that p = 1.

5. Derive (24), and prove that
$$T_v \begin{pmatrix} 0 \\ 0 \\ 0 \\ 1 \end{pmatrix} = \begin{pmatrix} \dfrac{-v}{\sqrt{1 - v^2}} \\ 0 \\ 0 \\ \dfrac{1}{\sqrt{1 - v^2}} \end{pmatrix}. \qquad (25)$$
Hint: Use a technique similar to the derivation of (22).

6. Consider three coordinate systems S, S′, and S″ with the corresponding axes (x, x′, x″; y, y′, y″; and z, z′, z″) parallel and such that the x-, x′-, and x″-axes coincide. Suppose that S′ is moving past S at a velocity v1 > 0 (as measured on S), S″ is moving past S′ at a velocity v2 > 0 (as measured on S′), and S″ is moving past S at a velocity v3 > 0 (as measured on S), and that there are three clocks C, C′, and C″ such that C is stationary relative to S, C′ is stationary relative to S′, and C″ is stationary relative to S″. Suppose that when measured on any of the three clocks, all the origins of S, S′, and S″ coincide at time 0. Assuming that Tv3 = Tv2Tv1 (i.e., Bv3 = Bv2Bv1), prove that
$$v_3 = \frac{v_1 + v_2}{1 + v_1 v_2}.$$
Note that substituting v2 = 1 in this equation yields v3 = 1. This tells us that the speed of light as measured in S or S′ is the same. Why would we be surprised if this were not the case?

7. Compute (Bv)⁻¹. Show (Bv)⁻¹ = B(−v). Conclude that if S′ moves at a negative velocity v relative to S, then [Tv]β = Bv, where Bv is of the form given in Theorem 6.42.

8. Suppose that an astronaut left Earth in the year 2000 and traveled to a star 99 light years away from Earth at 99% of the speed of light and that upon reaching the star immediately turned around and returned to Earth at the same speed. Assuming Einstein’s special theory of relativity, show that if the astronaut was 20 years old at the time of departure, then he or she would return to Earth at age 48.2 in the year 2200. Explain the use of Exercise 7 in solving this problem.

9. Recall the moving space vehicle considered in the study of time contraction. Suppose that the vehicle is moving toward a fixed star located on the x-axis of S at a distance b units from the origin of S.
If the space vehicle moves toward the star at velocity $v$, Earthlings (who remain "almost" stationary relative to $S$) compute the time it takes for the vehicle to reach the star as $t = b/v$. Due to the phenomenon of time contraction, the astronaut perceives a time span of $t' = t\sqrt{1-v^2} = (b/v)\sqrt{1-v^2}$. A paradox appears in that the astronaut perceives a time span inconsistent with a distance of $b$ and a velocity of $v$. The paradox is resolved by observing that the distance from the solar system to the star as measured by the astronaut is less than $b$. Assuming that the coordinate systems $S$ and $S'$ and clocks $C$ and $C'$ are as in the discussion of time contraction, prove the following results.
(a) At time $t$ (as measured on $C$), the space–time coordinates of the star relative to $S$ and $C$ are
$$\begin{pmatrix} b \\ 0 \\ 0 \\ t \end{pmatrix}.$$
(b) At time $t$ (as measured on $C$), the space–time coordinates of the star relative to $S'$ and $C'$ are
$$\begin{pmatrix} \dfrac{b - vt}{\sqrt{1-v^2}} \\ 0 \\ 0 \\ \dfrac{t - bv}{\sqrt{1-v^2}} \end{pmatrix}.$$
(c) For
$$x' = \frac{b - tv}{\sqrt{1-v^2}} \quad\text{and}\quad t' = \frac{t - bv}{\sqrt{1-v^2}},$$
we have $x' = b\sqrt{1-v^2} - t'v$. This result may be interpreted to mean that at time $t'$ as measured by the astronaut, the distance from the astronaut to the star as measured by the astronaut (see Figure 6.9) is $b\sqrt{1-v^2} - t'v$.
[Figure 6.9: the coordinate systems $S$ and $S'$ with clocks $C$ and $C'$; the star is at $(b, 0, 0)$ in coordinates relative to $S$ and at $(x', 0, 0)$ in coordinates relative to $S'$.]
(d) Conclude from the preceding equation that
(1) the speed of the space vehicle relative to the star, as measured by the astronaut, is $v$;
(2) the distance from Earth to the star, as measured by the astronaut, is $b\sqrt{1-v^2}$.
Thus distances along the line of motion of the space vehicle appear to be contracted by a factor of $\sqrt{1-v^2}$.

Sec. 6.10 Conditioning and the Rayleigh Quotient

1. Label the following statements as true or false.
(a) If $Ax = b$ is well-conditioned, then cond(A) is small.
(b) If cond(A) is large, then $Ax = b$ is ill-conditioned.
(c) If cond(A) is small, then $Ax = b$ is well-conditioned.
(d) The norm of A equals the Rayleigh quotient.
(e) The norm of A always equals the largest eigenvalue of A.

2. Compute the norms of the following matrices.
(a) $\begin{pmatrix} 4 & 0 \\ 1 & 3 \end{pmatrix}$
(b) $\begin{pmatrix} 5 & 3 \\ -3 & 3 \end{pmatrix}$
(c) $\begin{pmatrix} 1 & \frac{-2}{\sqrt{3}} & 0 \\ 0 & \frac{-2}{\sqrt{3}} & 1 \\ 0 & \frac{2}{\sqrt{3}} & 1 \end{pmatrix}$

3. Prove that if B is symmetric, then $\|B\|$ is the largest eigenvalue of B.

4. Let $A$ and $A^{-1}$ be as follows:
$$A = \begin{pmatrix} 6 & 13 & -17 \\ 13 & 29 & -38 \\ -17 & -38 & 50 \end{pmatrix} \quad\text{and}\quad A^{-1} = \begin{pmatrix} 6 & -4 & -1 \\ -4 & 11 & 7 \\ -1 & 7 & 5 \end{pmatrix}.$$
The eigenvalues of A are approximately 84.74, 0.2007, and 0.0588.
(a) Approximate $\|A\|$, $\|A^{-1}\|$, and cond(A). (Note Exercise 3.)
(b) Suppose that we have vectors $x$ and $\tilde{x}$ such that $Ax = b$ and $\|b - A\tilde{x}\| \le 0.001$. Use (a) to determine upper bounds for $\|\tilde{x} - A^{-1}b\|$ (the absolute error) and $\|\tilde{x} - A^{-1}b\| / \|A^{-1}b\|$ (the relative error).

5. Suppose that $x$ is the actual solution of $Ax = b$ and that a computer arrives at an approximate solution $\tilde{x}$. If cond(A) = 100, $\|b\| = 1$, and $\|b - A\tilde{x}\| = 0.1$, obtain upper and lower bounds for $\|x - \tilde{x}\| / \|x\|$.

6. Let
$$B = \begin{pmatrix} 2 & 1 & 1 \\ 1 & 2 & 1 \\ 1 & 1 & 2 \end{pmatrix}.$$
Compute
$$R\begin{pmatrix} 1 \\ -2 \\ 3 \end{pmatrix}, \quad \|B\|, \quad\text{and}\quad \operatorname{cond}(B).$$

7. Let B be a symmetric matrix. Prove that $\min_{x \ne 0} R(x)$ equals the smallest eigenvalue of B.

8. Prove that if $\lambda$ is an eigenvalue of $AA^*$, then $\lambda$ is an eigenvalue of $A^*A$. This completes the proof of the lemma to Corollary 2 to Theorem 6.43.

9. Prove that if A is an invertible matrix and $Ax = b$, then
$$\frac{1}{\|A\| \cdot \|A^{-1}\|} \left( \frac{\|\delta b\|}{\|b\|} \right) \le \frac{\|\delta x\|}{\|x\|}.$$

10. Prove the left inequality of (a) in Theorem 6.44.

11. Prove that cond(A) = 1 if and only if A is a scalar multiple of a unitary or orthogonal matrix.

12. (a) Let A and B be square matrices that are unitarily equivalent. Prove that $\|A\| = \|B\|$.
(b) Let T be a linear operator on a finite-dimensional inner product space V. Define
$$\|T\| = \max_{x \ne 0} \frac{\|T(x)\|}{\|x\|}.$$
Prove that $\|T\| = \|[T]_\beta\|$, where $\beta$ is any orthonormal basis for V.
(c) Let V be an infinite-dimensional inner product space with an orthonormal basis $\{v_1, v_2, \ldots\}$. Let T be the linear operator on V such that $T(v_k) = k v_k$. Prove that $\|T\|$ (defined in (b)) does not exist.

The next exercise assumes the definitions of singular value and pseudoinverse and the results of Section 6.7.

13. Let A be an $n \times n$ matrix of rank $r$ with the nonzero singular values $\sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_r$. Prove each of the following results.
(a) $\|A\| = \sigma_1$.
(b) $\|A^\dagger\| = \dfrac{1}{\sigma_r}$.
(c) If A is invertible (and hence $r = n$), then $\operatorname{cond}(A) = \dfrac{\sigma_1}{\sigma_n}$.
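For Exercise 4 of Sec. 6.10, the quantities can be estimated numerically. The sketch below is a rough illustration rather than the method the text prescribes. It leans on Exercise 3, so it assumes the norm of a symmetric matrix with positive eigenvalues is its largest eigenvalue, and it uses a hypothetical helper `dominant_eigenvalue` that runs plain power iteration from an arbitrary start vector. The inverse is entered with −1 in both corner entries, and the integer product check confirms that choice.

```python
def matvec(M, x):
    """Multiply a square matrix (nested lists) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def dominant_eigenvalue(M, iterations=200):
    """Power iteration; returns the Rayleigh quotient of the final unit iterate."""
    x = [1.0, 2.0, 3.0]  # arbitrary start vector (an assumption of this sketch)
    for _ in range(iterations):
        y = matvec(M, x)
        length = sum(c * c for c in y) ** 0.5
        x = [c / length for c in y]
    Mx = matvec(M, x)
    return sum(a * b for a, b in zip(x, Mx))  # x has unit length

A = [[6, 13, -17], [13, 29, -38], [-17, -38, 50]]
A_inv = [[6, -4, -1], [-4, 11, 7], [-1, 7, 5]]

# Exact integer check that A_inv really is the inverse of A.
product = [[sum(A[i][k] * A_inv[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
is_inverse = product == [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Both matrices are symmetric with positive eigenvalues, so each norm
# is taken to be the largest eigenvalue.
norm_A = dominant_eigenvalue(A)
norm_A_inv = dominant_eigenvalue(A_inv)
cond_A = norm_A * norm_A_inv

# Part (b): x~ - A^{-1} b = A^{-1}(A x~ - b), hence
# ||x~ - A^{-1} b|| <= ||A^{-1}|| * ||b - A x~||.
abs_error_bound = norm_A_inv * 0.001

print(is_inverse, norm_A, norm_A_inv, cond_A, abs_error_bound)
```

With these inputs the estimate of $\|A\|$ should agree with the quoted eigenvalue 84.74, and $\|A^{-1}\|$ should be close to 1/0.0588, which puts cond(A) in the neighborhood of 1441 and the absolute error bound near 0.017.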
